Research Article

Detection of Images Generated by Artificial Intelligence, Visual Literacy and Disinformation

Miguel Domínguez Rigo* and María Ángeles Alonso Garrido

Complutense University of Madrid, Spain

*Corresponding author: Miguel Domínguez Rigo, Complutense University of Madrid, Spain

Received Date: September 23, 2025; Published Date: September 26, 2025

Summary

The rapid advances in generative artificial intelligence (AI) for image generation in recent years make it challenging to detect and identify artificially generated synthetic images. Through a comparative study of two selected groups of individuals (one with a higher level of visual literacy than the other), to whom we showed two images, one real and one generated using AI, we obtained results that demonstrate the real difficulty both groups had, regardless of their level of visual literacy, in correctly identifying the origin of the images. The images created by AI are so realistic that even those with a higher level of visual literacy have trouble distinguishing the real image from the synthetic one. We conclude that visual literacy is still necessary but may be insufficient in the current context. It would be advisable to integrate greater digital and media skills, as well as a visual literacy that incorporates new strategies related to new technologies, to help detect the misinformation that the misuse of artificial intelligence can generate.

Abstract

The dizzying progress of generative artificial intelligence in image generation in recent years poses a challenge when it comes to detecting and identifying synthetic images that have been artificially generated. Through a comparative study between two selected groups of people (one with a higher level of visual literacy than the other), to whom we showed two images, one real and the other generated by artificial intelligence, we obtained results that show the real difficulty that both groups, regardless of their level of visual literacy, had in correctly identifying the origin of the images. The AI-generated images are so realistic that even those with higher levels of visual literacy have problems distinguishing between the real and the synthetic image. We conclude that visual literacy is still necessary but may not be sufficient in the current context, and that it is appropriate to integrate greater digital and media literacy, as well as a visual literacy that incorporates new strategies related to new technologies, to help detect the misinformation that an improper use of artificial intelligence can generate.

Keywords: Disinformation; Artificial Intelligence; Visual Literacy; Detection; Deepfake

Introduction and Experiment Design

Fake content and AI-generated images

We are interested in highlighting the role of artificial intelligence in static and dynamic images, where, in a matter of months, this technology has advanced tremendously, especially in the audiovisual field. The current decade is proving to be crucial in the development and use of artificial intelligence, which has become democratized and popularized. During 2024, we have seen how this technology has become more present in people’s lives and how it has become easier to create, manipulate, and transform images in multiple ways without any technical knowledge. However, we are only in the initial phase of development, and much greater progress is therefore expected in the coming years.

Mobile phones have become indispensable devices. Human communication, whether we like it or not, is closely linked to the internet and social media, especially among young people, who widely use these devices and the internet to stay informed and communicate. The veracity of the information and content we receive through digital environments is intimately connected to the use of images, which are consumed daily as never before in our history. Anyone can create adulterated or false content and disseminate it quickly, in real time, from their mobile phone, even using artificial intelligence applications that are already integrated into many new devices or freely available.

Misinformation is a reality, and the consequences for our society can be very serious, especially for younger people, who are much more accustomed to using new technologies, social media, and the internet. Measures must be taken to banish the acceptance of misinformation and indifference to truthfulness from the internet. New developments related to artificial intelligence can be very useful and largely beneficial, but they can also be seriously harmful. Images generated by artificial intelligence, for instance, can make it difficult to distinguish a true image from a false one and, consequently, true information or content from false content.

Already at the end of the last century, some authors, such as D.A. Dondis, J. Debes, and R. Arnheim, laid the foundations for visual literacy. A visually literate person has the ability to interpret, understand, and analyse the creations, objects, and symbols (whether natural or artificial) present in their daily environment, including artistic, visual, and audiovisual expressions [1]; in short, they can acquire a critical perspective on the real world.

Thus, to the recommendations that Caro [2] collected several years ago about the need for digital literacy, we can add the need for visual and media literacy adapted to the new challenges:

From the perspective of the Internet user or recipient (it must not be forgotten that the recipient is also a potential disseminator), a series of measures must be developed, such as cultivating critical distrust of the information received, in addition to diversifying information sources and contrasting facts and opinions. Digital literacy is thus presented as an unavoidable necessity to help young people unravel and reflect on the codes inherent to the Internet. (p. 196)

In this regard, we seek to determine whether naked-eye detection of images created or manipulated by mechanical or artificial means, and its relationship with the level of visual literacy, has changed. Whereas only a few years ago it seemed reasonable to assume that a higher level of visual literacy [3] helped people identify false and manipulated images, and therefore detect the misinformation associated with them, this study suggests that, because of the rapid advancement and sophistication of artificial intelligence, it may now be considerably difficult to identify manipulated images or images generated entirely by this technology.

Fake content and images generated by artificial intelligence

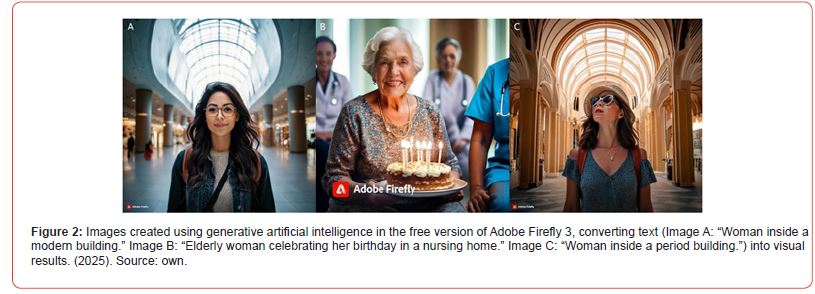

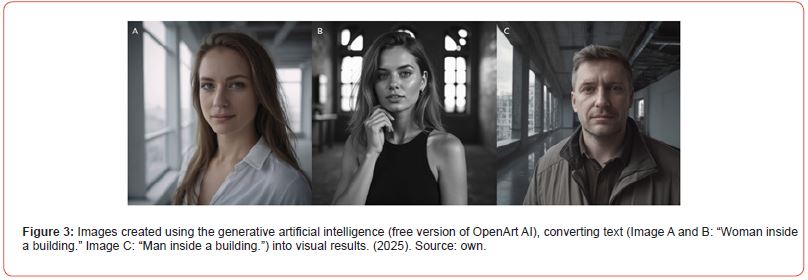

It is possible to generate a realistic image in seconds, or more precisely, in the time it takes to describe the desired image in text. These textual descriptions or instructions (prompts) provide the system with the information it needs to generate the image. As an example, we generated a series of images (Figures 2-4) in less than sixty seconds using different generative artificial intelligence models: Adobe Firefly 3 (free version), OpenArt AI (free version), and Midjourney 6.0 (paid version). While some people might guess at first glance that these images were created using artificial intelligence, the variety and rapid growth of available models and tools are steadily improving the quality and realism of the images, making such recognition increasingly difficult. The same is true for realistic videos generated using artificial intelligence.

This ease of use has made it easier to generate fake content than authentic content: it is no longer necessary to obtain or search for a real image to support a text, since today both the image and the text can be artificially created. Social media spread images and videos generated by artificial intelligence on a daily basis, synthetic images that, for the most part, do not warn or inform the user of their origin or authorship. For example, many social media accounts use images that are not real, yet are presented as truthful, as a hook. They are sometimes accompanied by text that reinforces the image or seeks to appeal to primal emotions. By provoking an emotional experience in the viewer, these images allow a rapid and deep identification with the message they transmit, as well as resistance to any arguments that might call them into question [4]. In this way, false content is created: intentional lies that users fail to detect and, in turn, share. The proliferation of this type of deceptive content is alarming.

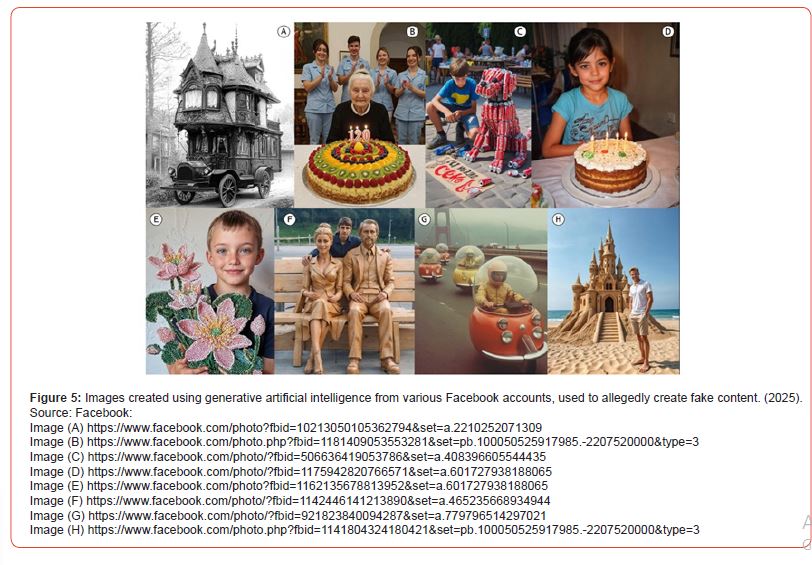

To illustrate this reality, Figure 5 shows a small sample of this fraudulent content found on Facebook. For example, the text associated with image 5A describes how, in the 20th century, the arrival of motor vehicles began to replace traditional horse-drawn caravans, although interest in the ornate and elegant design characteristic of the 19th century remained. Along the same pseudo-informative lines, image 5G shows the “Peel Trident”, a two-seater, three-wheeled microcar manufactured by the Peel Engineering company in 1965 and 1966. However, the image shows only one front wheel and space for a single driver, so the vehicle depicted is not the one the text refers to, which has two front wheels and seats two. Image 5B is shown with the following text: “Today is my dear grandmother’s birthday. Please don’t leave without blessing her!” We can see an elderly woman with a birthday cake whose candles suggest she is turning 120, an age virtually unheard of in verified records, while the woman in the image appears to be younger. Something similar happens with image 5D, which carries the following message: “This year she turns 3. She has a cake, but no friends, no one to greet her!” Although the girl in the image clearly appears to be over 4 years old (the number of candles on the cake), at the time we evaluated this post, it had already generated more than 3,703 interactions and 892 comments. The posts shown in images 5C, 5E, 5F, and 5H have similar motives, eliciting responses and interactions with similar text, such as the one accompanying the child in image 5E: “Work by seven-year-old George, a little sad because no one had a nice word to say about my work or even said hi.” At the time of evaluation, this content had been shared 4,042 times, generating more than 129,000 interactions and 35,900 comments.
Most of the comments associated with this fake content, far from highlighting the deception, demonstrate that the posts are considered real and truthful by the people who have interacted with them.

The purpose of this misleading or false content is to generate interactions, the more the better, regardless of the reactions they provoke. The intention is for these images to be shared, commented on, liked, and so on. We are, therefore, facing a new, entirely fictitious context whose sole objective is to make an impact, stir up, or move the audience, without any ethical or moral considerations. This is a deliberate distortion, a deception that has become normalized on the internet and disconnected from the real world, where this type of behaviour would be condemned much more strongly. The legitimization of lies is a reality: behind the anonymity of many of these accounts lie intentions that have little or nothing to do with the creation or dissemination of real content, information, or knowledge. Franganillo [5] warned about the potential of this technology for generating hoaxes and frauds:

However, this technology also carries significant ethical and social risks; as it advances, the potential for deception and other dangers increases. Artificial content is so believable that it can confuse society in the absence of a code of conduct. If many people already struggle to debunk a hoax or deception with simple evidence, the picture painted by this growing sophistication is even more daunting and worrying. (p. 11)

The high number of interactions and comments on some of the aforementioned publications (Figure 6) suggests that many of these contents arouse some interest in users or, at least, succeed in influencing them. This is partly because false content is shared more than genuine content, and not only because it aligns with our own way of thinking, as Juárez [6] notes:

We have also observed how people’s behaviour changes when they read news with a certain emotional tone. If it also has a high number of replies or likes, users feel the need to share it without verifying the information. They simply consider it true, either because the source it comes from seems of quality; or because the number of interactions the message has is high and that seems sufficient for them to consider it truthful; or because it comes from their personal network of contacts and they accept the news shared by their loved ones and do not question this information; or because of the need to feel integrated into this virtual social tide. (p. 279)

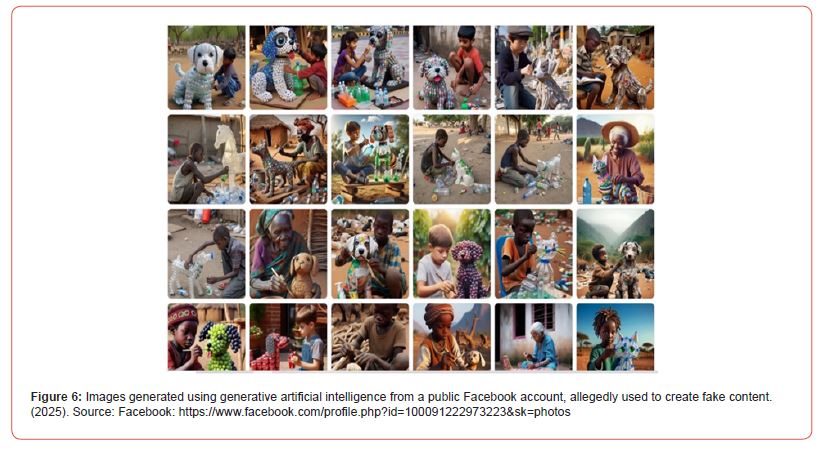

We know that many people use social media to stay informed and that Facebook is one of the most popular social media platforms among adults. However, Facebook and other major social media platforms lack effective measures to regulate and detect misinformation. In fact, Facebook founder Mark Zuckerberg recently announced that he is discontinuing the anti-hoax fact-checking program on Facebook and Instagram, arguing that objectively verifying the veracity of content could be considered a form of censorship and that those responsible for this task tend to introduce their own biases [7]. In this context, and given the extremely high speed of propagation, it is advisable, before disseminating any apparently true or legitimate content, to try to determine whether we are dealing with false information, deliberately distorted information, poor-quality content, sensationalist news, hoaxes, etc. To do so, accessing the source, verifying the news, or tracing its origin through other means is vital to prevent its amplification on the internet. Sometimes, verifying content is as simple as visiting the account’s public profile and viewing the images displayed there. As an example, we show some of the images that can be seen on one of the Facebook profiles from our previous example (Figure 5, image C), all of which were generated by artificial intelligence (Figure 6).

One thing to keep in mind is that people tend to believe that deepfakes (a term combining “deep learning” and “fake”: image, video, or voice files manipulated by generative artificial intelligence that are extraordinarily believable) influence others more than themselves, because we systematically overestimate ourselves and believe we are better than others at distinguishing the false from the real. This self-perception makes us more susceptible to being influenced by such content and, curiously, it is even more pronounced among those with high cognitive ability [8,9].

Many artificial intelligence systems are human-dependent, meaning they are not autonomous. Although there is talk of self-learning and independent artificial intelligence, it is humans who can create or use this technology for unethical purposes.

Methodology

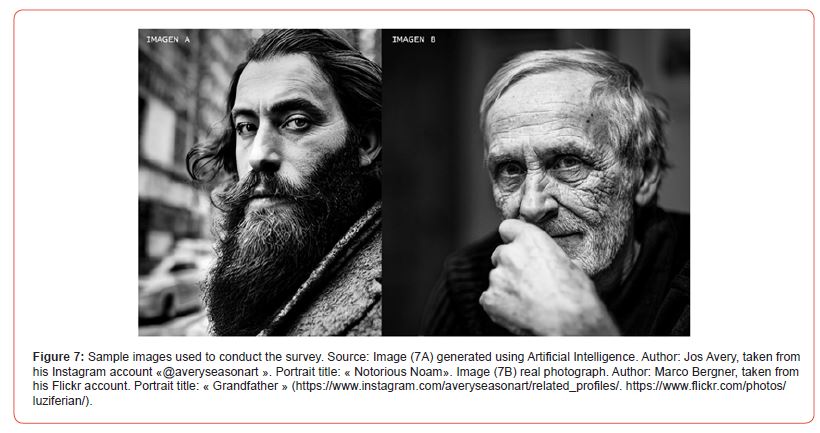

For the study, two similar images (Figure 7) were selected, each showing a black-and-white portrait of a different person. The first image was created using artificial intelligence, while the second is a real photograph. The “authorship” of the first image (7A) is attributed to Jos Avery, who became popular for showing numerous spectacular black-and-white portraits on his Instagram account (@averyseasonart). These portraits were created using the artificial intelligence program Midjourney and subsequently retouched by him, without revealing the origin of the images (he even stated on his account that he had used a Nikon D810 to take the photographs), and they were not recognized as artificial by his numerous followers. The second image (7B) belongs to Marco Bergner, a contemporary self-taught German photographer who shows his work on Flickr, a website for storing and sharing photographs, and on his own website (https://mbergner-foto.de/).

The comparative study was conducted with 280 individuals selected using non-probability discretionary (purposive) sampling. Two groups of people were selected and shown both images (Figure 7) during January 2024 via an online survey. The first group of 120 people, referred to as Group 1 AV, had a higher level of visual literacy, while the second group of 160 people, referred to as Group 2, showed no evidence of significant visual literacy arising from their education, experience, or profession, as was assumed for Group 1 AV. The members of Group 1 AV were selected based on criteria that presumably gave them a greater ability to interpret images thoroughly and accurately: teachers in the area of plastic and visual arts, experts in design and visual communication, plastic and visual artists, graphic designers, photographers, people with specific training, etc., that is, people accustomed to reading and working with images. Neither group was aware of the purpose of the survey. Participants accessed it through a link to a Google Forms questionnaire, which only asked them to answer 4 questions and indicate their age numerically. No time limit was set for completing the survey, but once completed, they could not access it again.

Group 1 AV consisted of people aged between 18 and 70. By age band, 18 people were aged 18 to 30, 51 were aged 30 to 50, and 51 were aged 50 to 70.

Group 2 consisted of people aged between 19 and 65. By age band, 112 people were aged 18 to 30, 26 were aged 30 to 50, and 22 were aged 50 to 70.

The same four questions were posed to both groups, with both images shown simultaneously (Figure 7). Respondents had to choose the item that seemed correct to them: number 1, number 2, number 3, or number 4.

1. Image A has been generated with artificial intelligence.

2. Image B has been generated with artificial intelligence.

3. Neither of the two images has been generated with artificial intelligence.

4. Both images have been generated with artificial intelligence.

Results

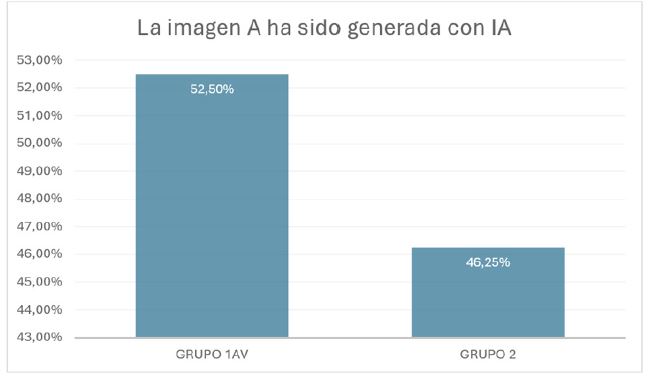

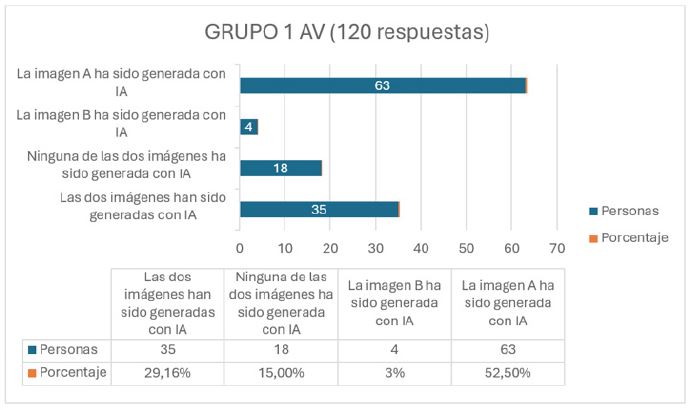

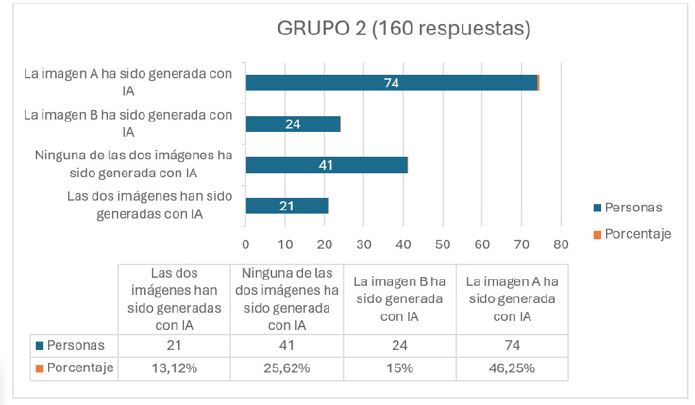

Regarding the first question posed, the largest share of respondents in both groups recognized the artificially created image (image 7A). The percentages in the two groups are similar, although the share that correctly identifies the image is slightly higher (by 6.3 percentage points) among those with a higher level of visual literacy (Table 1). This small difference may indicate that a higher level of visual literacy (Group 1 AV) leads to a slight improvement in detecting the elements present in images generated with artificial intelligence, thus helping to reveal their origin.

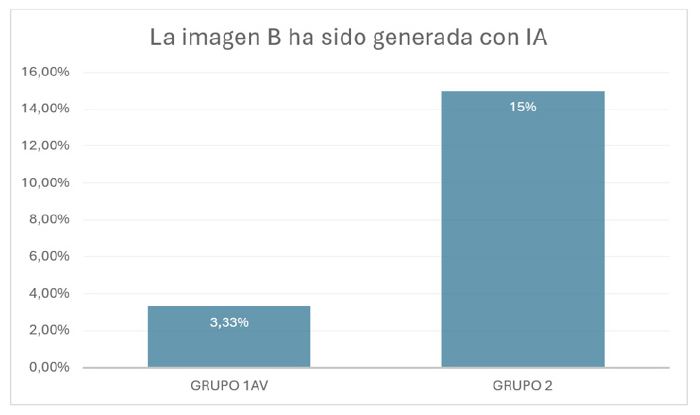

As for the second question, on whether image 7B (a real photograph) was created synthetically, only 3.3% of Group 1 AV considered it to have been generated using artificial intelligence, compared with 15% of Group 2. This difference of almost 12 percentage points suggests that Group 2 is more likely to mistake the authentic image for a synthetic one, while more people in Group 1 AV recognize the elements typical of a real photograph. However, without inferential testing, this difference of about 12 points cannot be considered conclusive (Table 2).

Table 1: Graph of the responses to question no. 1.

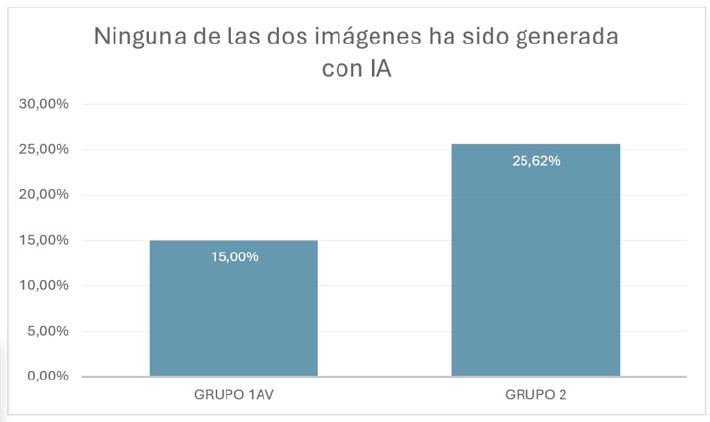

Regarding the third alternative, which proposes that neither image was generated by artificial intelligence, 15% of Group 1 AV considered both images to be real, while this percentage rises to 25.62% in Group 2. This difference of just over 10 percentage points suggests that Group 2 is somewhat more confident than Group 1 AV about the legitimacy of both images (Table 3).

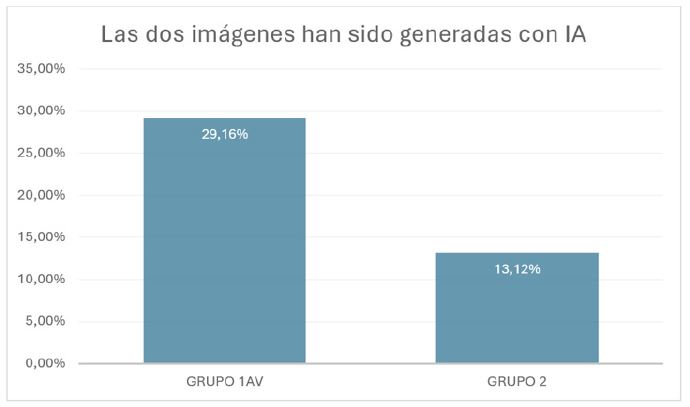

The fourth question shows the greatest difference between the two groups, a gap of 16 percentage points. As for the suspicion that both images could have been created entirely by artificial intelligence, 29.16% of Group 1 AV considered this possibility, while only 13.12% of Group 2 chose this option. Although we cannot confirm it, Group 1 AV, having greater knowledge of the hyperrealistic creative possibilities of artificial intelligence systems, may have distrusted the authenticity of both images equally (Table 4).

The following graphs show the full set of responses for each group, Group 1 AV (Table 5) and Group 2 (Table 6):

Table 2: Graph of the responses to question no. 2.

Table 3: Graph of the responses to question no. 3.

Table 4: Graph of the responses to question no. 4.

Table 5: Graph of the responses of Group 1 AV.

Table 6: Graph of the responses of Group 2.
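As a cross-check of the percentages reported above, the short Python sketch below converts them back into respondent counts. It assumes, as the questionnaire design suggests but the study does not state explicitly, that each respondent chose exactly one of the four options, so the count for option 1 (shown only in Table 1, not in the text) is obtained by subtraction. The names `GROUP_SIZES`, `REPORTED_PCT`, `reconstruct_counts`, and `shares` are illustrative, not part of the original study.

```python
# Sketch: recover respondent counts from the percentages reported in the Results.
# Assumption (not stated explicitly in the study): each respondent selected
# exactly one of the four options, so option 1 is obtained by subtraction.

GROUP_SIZES = {"Group 1 AV": 120, "Group 2": 160}  # from the Methodology section

# Percentages reported in the text for options 2, 3 and 4.
REPORTED_PCT = {
    "Group 1 AV": {2: 3.3, 3: 15.0, 4: 29.16},
    "Group 2": {2: 15.0, 3: 25.62, 4: 13.12},
}


def reconstruct_counts(n: int, pct: dict) -> dict:
    """Round each reported percentage back to a whole number of respondents."""
    counts = {option: round(p * n / 100) for option, p in pct.items()}
    # Option 1 takes the remaining respondents (single-choice assumption).
    counts[1] = n - sum(counts.values())
    return dict(sorted(counts.items()))


def shares(counts: dict) -> dict:
    """Percentage of the group that chose each option."""
    total = sum(counts.values())
    return {option: 100 * c / total for option, c in counts.items()}


counts = {g: reconstruct_counts(n, REPORTED_PCT[g]) for g, n in GROUP_SIZES.items()}
pct = {g: shares(c) for g, c in counts.items()}

for option in range(1, 5):
    g1, g2 = pct["Group 1 AV"][option], pct["Group 2"][option]
    print(f"Option {option}: Group 1 AV {g1:.2f}%, Group 2 {g2:.2f}%, "
          f"gap {g1 - g2:+.2f} percentage points")
```

Under this single-choice assumption, every reported percentage corresponds to a whole number of respondents (for example, 3.3% of 120 rounds to 4 people and 25.62% of 160 to 41), and the reconstructed shares for option 1 are 52.5% in Group 1 AV and 46.25% in Group 2, a gap of 6.25 percentage points consistent with the 6.3% reported for Table 1.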

Discussion

There are hardly any previous studies by other authors with which we can compare these results. There is some research on the detection of images created by artificial intelligence or the recognition of deepfakes, such as that carried out by Sanchez-Acedo, Carbonell-Alcocer, Gertrudix and Rubio-Tamayo [17], Ahmed [8], Gutiérrez-Manjón and Castillejo-de-Hoces [10], and Yucra-Mamani, Torres-Cruz and Aragón-Cruz [11], but none of these studies takes into account the participants’ degree of visual literacy when analysing their ability to detect the images.

The data obtained, although slightly favouring better recognition of AI-generated images in the group with a higher level of visual literacy, are not conclusive. To obtain more definitive results, it would be advisable to increase the number of people surveyed in both groups and to conduct inferential statistical analyses. Significantly expanding the sample size and segmenting it equally by age group could yield more precise data. We also believe it is advisable to implement strategies that help determine more accurately which people do or do not have a higher level of visual literacy, perhaps through a preselection based on a more objective methodology. It might also be advisable to increase the number of images, keeping the same categories (images generated by artificial intelligence and real photographs) but setting a higher minimum number of images created by different generative artificial intelligence models and of real photographs by different authors.

No time limit was set for respondents to answer the questions posed due to the technical difficulty involved, but for greater accuracy in the results, establishing a time limit for viewing the images and another for responding to the proposed questions would provide a more consistent framework for participants.

Ultimately, by improving these procedures, we could move from describing possible observations or trends to estimating population parameters and making informed predictions. We therefore maintain that it is feasible to continue this study by expanding and improving the design of its methodology, defining it in a more comprehensive and systematic manner.

Conclusion

By now, there is no doubt about the serious dangers that the inappropriate or malicious use of artificial intelligence can pose. An ethical and human-centric approach must be guaranteed to prevent the exceptional development we are witnessing from being used maliciously. One example is the European Union’s interest in establishing a regulatory framework for its use, which is partly due to the detection of potential risks associated with this technology. On March 13, 2024, the European Parliament passed a historic law, the EU Artificial Intelligence Regulation, the first comprehensive legislation on artificial intelligence worldwide, with the aim of ensuring that AI systems operate safely, ethically, and reliably. It entered into force on August 1 of the same year [12]. We are beginning to experience the consequences of a new era in which guidelines and criteria to delimit or regulate bad practices are still lacking, as many of the consequences of this harmful use are being perceived at the same time as artificial intelligence is developing.

Visual literacy is still necessary in an eminently audiovisual world. Before the arrival of artificial intelligence, it could ensure a high success rate in detecting manipulations, images taken out of context, and framing modifications, and in identifying elements of graphic language, etc. Today, however, visual literacy, although, as we have pointed out, still essential and highly recommended, appears, in light of the preliminary results obtained, insufficient on its own and in its current form to confront the complexity of a phenomenon as new as it is disturbing. If our concerns are confirmed, new approaches and developments in visual literacy will be necessary so that it can adapt to the new realities brought about by artificial intelligence.

The problem we face is very complex, as the most worrying aspect is not the development of artificial intelligence, but rather its fraudulent use, which is perceived as a real risk that can create various challenges associated with disinformation. Artificial intelligence clearly represents advances in many fields, and in audiovisual production, it can be of great support, speeding up processes, reducing costs, developing creative environments, and generating new ideas. However, it can also significantly contribute to disinformation through the creation of false images. Although any image taken out of context, distorted, or manipulated, whether real or not, can generate disinformation, the processes of creating false images have been facilitated and popularized with the evolution and development of generative artificial intelligence applied to images. Currently, it is much more complicated to identify images generated by this technology, which has been refined in a very short time, reaching a level of complexity and realism that was unthinkable just three years ago.

Generative image tools continue to improve their results. The cues that used to help identify images generated by artificial intelligence (image style, textures, lights and shadows, brightness, rendering of hands and fingers, integration of the various elements, definition, etc.) are no longer effective; they have become obsolete in the face of synthetic photographic hyperrealism, which is a reality today.

The most relevant initial conclusion we can draw from this study is that artificial intelligence is capable of creating images so realistic that humans do not detect or identify them as artificial, even when they have a higher degree of visual literacy. Based on the results, adequate visual literacy may contribute to improving their detection, but this advantage is too slight to be considered significant. This technology has advanced to the point that visual literacy, although advantageous, is currently insufficient on its own and must be complemented by media and digital literacy, as well as by a pedagogy focused on communication and greater education in critical thinking and meaning. This educational challenge must also address the moral issues arising from the systematic acceptance and justification of lies on the internet, and involve creating new strategies around visual literacy to adapt it to the growth and sophistication of new technologies. For example, educating people to approach images as created or used by a messenger, rather than as autonomous documents, allows viewers to better understand how to evaluate the message and what actions to take [13].

Without transparent, ethical, safe, and responsible development of this technology, without specific regulation, and without comprehensive education and literacy training for society, the proper use of artificial intelligence will be left entirely in the hands of users. In that case, the reproduction of gender and racial stereotypes, the generation of misinformation and manipulation, and its use for identity theft, extortion, intimidation, hate speech, harassment, and defamation will continue to be some of the threats arising from the misuse of generative artificial intelligence.

We should join forces to prevent images, which have so often been valuable allies of truth, from becoming a means of hiding or distorting reality. Losing the legitimacy of images as a means of revealing the world around us means losing our direction and orientation in a scenario as complex and turbulent as the current one [13-17].

Acknowledgment

None.

Conflict of Interest

No conflict of interest.

References

- Villamar JA, Revuelta F, Acosta M, Rivera Mieles AB (2021) Visual Literacy. Proceedings of Design 37: 142–147.

- Caro Samada MC (2015) Information and truth in the use of social networks by adolescents. Theory of Education. Interuniversity Journal 27(1): 187–199.

- Domínguez Rigo M (2020) Visual literacy as a defence against fake news. Journal of Learning Styles 13(26): 85–93.

- Gómez-de-Ágreda Á, Feijóo C, Salazar-García IA (2021) A new taxonomy of the use of the image in the self-interested shaping of the digital narrative. Deep fakes and artificial intelligence. Information Professional 30(2).

- Franganillo J (2023) Generative artificial intelligence and its impact on media content creation. methaodos. Journal of Social Sciences 11(2): m231102a10.

- Juárez Escribano B (2021) Impact and social diffusion of post-truth and fake news in virtual social environments. Miguel Hernández Communication Journal 12(1): 267–283.

- Kleinman Z (2025) Instagram and Facebook remove fact-checkers, as X did after being bought by Elon Musk. BBC News.

- Ahmed S (2023) Examining public perception and cognitive biases in the presumed influence of deepfakes threats: empirical evidence of third person perception from three studies. Asian Journal of Communication 33(3): 308-331.

- Köbis NC, Doležalová B, Soraperra I (2021) Fooled twice: People cannot detect deepfakes but think they can. iScience 24(11).

- Gutiérrez-Manjón S, Castillejo-de-Hoces B (2023) The future of visual literacy: Evaluating the detection of images generated by artificial intelligence. Hipertext.net (26): 37–46.

- Yucra-Mamani YJ, Torres-Cruz F, Aragón-Cruz WE (2024) Visual perception of real and AI-synthesized photographs on social media. VISUAL REVIEW. International Visual Culture Review 16(4): 197–212.

- European Commission (2024) The Artificial Intelligence Regulation enters into force.

- Bock MA (2023) Visual media literacy and ethics: Images as affordances in the digital public sphere. First Monday 28(7).

- Fernández García N (2017) Fake news: An opportunity for media literacy. New Society (269): 66–77.

- González Arencibia M, Martínez Cardero D (2020) Ethical dilemmas in the artificial intelligence scenario. Economía y Sociedad 25(57): 1–18.

- Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 laying down harmonised rules on artificial intelligence and amending Regulations (EC) No 300/2008, (EU) No 167/2013, (EU) No 168/2013, (EU) 2018/858, (EU) 2018/1139 and (EU) 2019/2144 and Directives 2014/90/EU, (EU) 2016/797 and (EU) 2020/1828 (Artificial Intelligence Act). Official Journal of the European Union, L 2024/1689, 12 July 2024.

- Sanchez-Acedo A, Carbonell-Alcocer A, Gertrudix M, Rubio-Tamayo JL (2024) The challenges of media and information literacy in the artificial intelligence ecology: deepfakes and misinformation. Communication & Society 37(4): 223-239.

Miguel Domínguez Rigo* and María Ángeles Alonso Garrido. Detection of Images Generated by Artificial Intelligence, Visual Literacy and Disinformation. Iris J of Edu & Res. 5(4): 2025. IJER.MS.ID.000614.


This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.