Review Article

From Design to Emotional and Ethical Enhancement in Human-Robot Interaction: A Systematic Review

Waleed M Al Nuwaiser*

Computer Science Department, College of Computer and Information Sciences, Imam Mohammad Ibn Saud Islamic University (IMSIU), Riyadh, 11623, Saudi Arabia

Corresponding Author: Computer Science Department, College of Computer and Information Sciences, Imam Mohammad Ibn Saud Islamic University (IMSIU), Riyadh, 11623, Saudi Arabia

Received Date: March 20, 2025; Published Date: March 27, 2025

Abstract

Forty studies on AI-driven human-robot interaction examine design methods that promote affective responsiveness and ethical behavior in sociotechnical contexts. Healthcare is the focus of 22 studies, followed by education with 14 studies and elderly care with 13. Additionally, several reports examine industrial and organizational contexts. Of the reviewed papers, 26 examine emotional aspects, 27 concentrate on ethical considerations, and 13 investigate both concurrently. Research in the emotional domain outlines systems that utilize emotion recognition and adaptive response mechanisms to enhance user engagement and trust. Voice assistants capable of recognizing and responding to emotions can enhance user happiness, although humans accurately identify only approximately 60% of robot-expressed emotions, even for high-arousal signals [1]. Simultaneously, ethical design is promoted through frameworks that prioritize fairness, privacy, transparency, and human-centered values. Studies indicate that methods combining feminist design principles with ethical assessment frameworks can mitigate bias and protect data privacy in various applications, including oncology chatbots and socially assistive robots (SARs). These studies delineate specific design principles (adaptive behavior, empathy-driven interfaces, and transparent algorithms) that enhance effective and ethically responsible human-robot interaction.

Introduction

The incorporation of artificial intelligence (AI) into human-robot interaction (HRI) represents a significant field of research, especially within social and technical contexts where emotional intelligence and ethical factors are essential. Recent studies indicate that AI-driven systems can markedly improve cognitive functions and emotional interactions when developed with human-centered principles [2, 3]. Despite technological advances, existing emotion expression systems remain limited. Research indicates that humans can accurately identify only approximately 60% of robotic emotions, even in high-arousal contexts [1]. This limitation underscores the need to further develop the emotional capabilities of HRI systems.

The deployment of AI-driven systems in healthcare, education, and elderly care has generated considerable ethical concerns related to privacy, fairness, and transparency [2, 3]. For instance, recent studies identify significant challenges in preserving data privacy in AI-supported therapeutic devices [4] and in mitigating potential biases in clinical applications [5], where privacy and confidentiality represent some of the basic tenets of practice. Furthermore, healthcare environments necessitate the highest degree of emotional responsiveness and adherence to ethical standards. Ensuring that AI systems align with these ethical imperatives requires rigorous oversight, continuous evaluation, and the integration of human-centered safeguards.

The creation of emotion-aware and ethically responsible HRI systems requires thorough examination of various factors. Emotion recognition and adaptive responses can improve user engagement and trust [6, 7]. However, it is essential to be cautious of the risks of fostering false emotional attachments and potential manipulation [8]. The incorporation of human values in robot design and the establishment of transparent decision-making processes [9] are essential for ethical HRI systems. This systematic review examines the following research question: What are the design and implementation strategies for AI-driven systems in HRI that enhance emotional responsiveness and ensure ethical compliance in diverse socio-technical environments, specifically within healthcare, education, and organizational settings?

This review examines the relationship between emotional intelligence and ethical design principles in HRI, highlighting the necessity for detailed guidelines that promote effective emotional engagement while maintaining ethical compliance. This analysis emphasizes implementation contexts where both dimensions are essential, including healthcare, education, and elderly care settings [3, 10], while also addressing the wider implications for human-centered AI development. Current systems demonstrate potential, with certain models expressing emotions such that around 60% of them are correctly recognized by humans [1]. However, substantial opportunities exist for enhancement in both technical performance and ethical considerations. The research question therefore addresses how these systems can be optimized to meet human needs effectively while upholding ethical standards and fostering positive social impact in various application contexts.

Methodology

This comprehensive review synthesized findings from 40 studies examining emotion-aware and ethical AI in HRI. Given that systematic reviews aim to provide a comprehensive and synthesized understanding of a research area, the number of included studies is determined by methodological rigor, relevance, and saturation of key themes rather than sheer volume. Systematic reviews in niche fields, such as AI-driven HRI, often rely on a targeted selection of high-quality studies to provide meaningful insights without unnecessary redundancy. The chosen 40 studies represent a cross-section of empirical and theoretical contributions that sufficiently capture the critical research on emotional and ethical dimensions of HRI. Prior research supports this approach, with studies indicating that systematic reviews with sample sizes ranging from 30 to 50 papers can achieve thematic saturation and yield meaningful insights in specialized fields [11, 12]. The methodological approach reflected a systematic integration of diverse study types and analytical frameworks.

Study Selection and Characteristics

A structured search was performed to investigate the research question: What are the design and implementation strategies for AI-driven systems in HRI that enhance emotional responsiveness and ensure ethical compliance in diverse socio-technical environments, specifically within healthcare, education, and organizational settings? We conducted a search through more than 126 million academic papers within the Semantic Scholar corpus. The 100 papers most pertinent to the research question were retrieved. This included a variety of study types, predominantly comprising survey/systematic reviews (19 studies) and theoretical/conceptual reviews (14 studies), as analyzed in the literature by [10] and [3]. The remaining studies included three experimental studies, two case studies, one qualitative study, and one empirical study [13-16].

Screening and Refinement Process

A structured screening process was implemented to identify and evaluate relevant studies on emotional intelligence and ethical considerations in AI-driven HRI systems, ensuring a rigorous and comprehensive review. This analysis examines research published from 2004 to 2025, integrating theoretical frameworks and empirical studies across various domains [3, 10]. The inclusion criteria emphasized several critical aspects necessary for comprehending the interaction between AI-driven systems, human emotions, and ethical considerations in HRI. First, the studies needed to investigate AI-driven systems, such as chatbots, humanoid robots, and social robots, that are specifically designed for human interaction. Second, they had to examine emotional and ethical factors, focusing on the impact of AI systems on human emotions, trust, empathy, and ethical responsibility. Third, the application setting constituted another essential factor. Research focusing on healthcare, education, industrial, or organizational settings was emphasized to encompass the wide range of practical AI applications. Both empirical investigations (whether quantitative, qualitative, or mixed-methods) and systematic reviews were considered, in order to ensure the reliability and relevance of the findings.

In addition to technical discussions, selected studies were required to incorporate a human interaction component, extending beyond purely mechanical or algorithmic considerations to examine the social and psychological aspects of HRI. Human-centered design methodologies were emphasized, ensuring that AI systems are evaluated in accordance with user needs and ethical principles. Ninety-four studies were initially identified through the application of rigorous screening criteria. Following a comprehensive assessment, forty studies were chosen for in-depth analysis due to their methodological rigor, relevance, and contributions to the field. The selected sample size ensures thematic saturation and retains analytical depth. Previous systematic reviews in AI and HRI have employed comparable sample sizes to effectively balance essential insights while minimizing redundancy [17]. This structured approach facilitates a focused and high-quality analysis of the evolving landscape of emotionally intelligent and ethically responsible AI-driven HRI systems.
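The inclusion criteria described above can be read as a simple screening rule. The following Python sketch is illustrative only: the `Candidate` record, its field names, and the `screen` function are hypothetical and are not the instrument used in this review. It assumes that the system, emotional/ethical, and human-interaction criteria act as hard requirements, while the application setting is treated as an emphasis rather than a strict filter.

```python
from dataclasses import dataclass
from typing import Tuple

# Settings named as emphasized in the inclusion criteria.
EMPHASIZED_SETTINGS = {"healthcare", "education", "industrial", "organizational"}


@dataclass
class Candidate:
    """Hypothetical screening record for one retrieved paper."""
    title: str
    ai_system_for_human_interaction: bool  # e.g. chatbot, humanoid or social robot
    addresses_emotion_or_ethics: bool      # emotions, trust, empathy, ethical responsibility
    has_human_interaction_component: bool  # goes beyond purely algorithmic concerns
    setting: str                           # e.g. "healthcare"


def screen(c: Candidate) -> Tuple[bool, bool]:
    """Return (eligible, emphasized) for a candidate paper.

    eligible:   all hard criteria are satisfied.
    emphasized: eligible and set in one of the emphasized application settings.
    """
    eligible = (
        c.ai_system_for_human_interaction
        and c.addresses_emotion_or_ethics
        and c.has_human_interaction_component
    )
    emphasized = eligible and c.setting in EMPHASIZED_SETTINGS
    return eligible, emphasized


# Usage with made-up candidates (not actual studies from the review).
candidates = [
    Candidate("Example A", True, True, True, "healthcare"),
    Candidate("Example B", True, False, True, "education"),
]
shortlist = [c.title for c in candidates if screen(c)[0]]
print(shortlist)
```

The final selection of forty studies also depended on a qualitative assessment of rigor and contribution, which a rule of this kind does not capture.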

Process of Data Extraction

The data extraction process was developed to systematically capture and analyze essential information from the selected studies, following the previously described structured screening phase. The data extraction concentrated on a thorough analysis across eight fundamental dimensions of AI-driven HRI. This process facilitated a thorough understanding of emotional intelligence and ethical considerations in various implementation contexts, such as healthcare, education, and industrial applications [7, 9]. The extraction protocol was focused on identifying patterns and trends concerning socio-emotional attributes, ethical design principles, and their practical applications [18, 19]. The method enabled a thorough examination of particular challenges and success factors in HRI [14, 20]. The following attributes were extracted for each of the studies:

1) Study Type – The studies were classified according to their methodological approaches, including empirical research, theoretical or conceptual reviews, survey/systematic reviews, case studies, experimental studies, and qualitative research. This classification elucidated each study's framework and the validity of the research.

2) Attributes of AI Systems and Robotic Platforms – Technological aspects were documented, encompassing system classification (e.g., chatbot, humanoid robot, SAR), core functionalities (e.g., natural language processing, computer vision, machine learning), and specific AI technologies employed. Data was gathered from system design, technology descriptions, and procedural details to ensure precise technical representation.

3) Interaction Context and Field – The primary social or professional context for HRI was identified. These encompassed healthcare, education, industrial sectors, organizational contexts, rehabilitation, elderly care, and other specialized fields. In studies that investigated various settings, all pertinent domains were documented.

4) Participant Characteristics – Essential demographic and contextual information was recorded, including age range, gender distribution, and the presence of special populations (e.g., children with traumatic brain injuries, individuals with disabilities). The absence of demographic details resulted in the study being classified as having “insufficient demographic information.”

5) Emotional Intelligence and Socio-Emotional Attributes – An analysis of AI systems focused on specific emotional competencies, such as mechanisms of empathy, capabilities for emotion recognition, adaptive emotional responses, and strategies for building trust. The study was recorded as lacking any identified mechanisms of emotional intelligence if such factors were not explicitly addressed.

6) Ethical Considerations and Design Principles – The studies were assessed for ethical frameworks and principles, including privacy safeguards, consent mechanisms, algorithmic transparency, considerations of equity and inclusivity, and strategies for bias mitigation. If ethical principles were not addressed, the notation “no explicit ethical framework discussed” was documented.

7) Key Research Findings – The primary empirical findings, technological or methodological innovations, essential insights into HRI, and recognized challenges were derived from the results and discussion sections.

This process facilitated a structured and comprehensive analysis of the emotional and ethical dimensions in HRI through systematic extraction and categorization of relevant elements. The screening phase concentrated on selecting studies according to inclusion criteria, whereas data extraction enabled comparative analysis and thematic synthesis, revealing broader trends and practical implications across various research domains.
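The attributes listed above can be pictured as one structured record per study. The sketch below is a minimal illustration in Python and not the extraction form actually used: the class name `ExtractionRecord` and all field names are assumptions. The two default notations in quotation marks are taken from the protocol text; the wording of `NO_EMOTION` is a paraphrase, since the exact notation is not given.

```python
from dataclasses import dataclass, field
from typing import List

# Notations quoted from the extraction protocol.
NO_DEMOGRAPHICS = "insufficient demographic information"
NO_ETHICS = "no explicit ethical framework discussed"
# Paraphrased placeholder; the protocol does not state the exact wording.
NO_EMOTION = "no emotional intelligence mechanisms identified"


@dataclass
class ExtractionRecord:
    """Hypothetical per-study record mirroring the listed attributes."""
    study_id: str
    study_type: str                                                 # attribute 1
    system_attributes: List[str] = field(default_factory=list)      # attribute 2
    interaction_contexts: List[str] = field(default_factory=list)   # attribute 3 (may be several)
    participants: str = NO_DEMOGRAPHICS                             # attribute 4
    emotional_attributes: List[str] = field(default_factory=list)   # attribute 5
    ethical_principles: List[str] = field(default_factory=list)     # attribute 6
    key_findings: List[str] = field(default_factory=list)           # attribute 7

    def emotional_summary(self) -> str:
        # Fall back to the placeholder when nothing was extracted.
        return "; ".join(self.emotional_attributes) or NO_EMOTION

    def ethical_summary(self) -> str:
        # Fall back to the quoted notation when no principles were discussed.
        return "; ".join(self.ethical_principles) or NO_ETHICS


# Usage with a made-up study.
record = ExtractionRecord(
    study_id="example-study",
    study_type="experimental",
    interaction_contexts=["healthcare", "elderly care"],
    emotional_attributes=["emotion recognition", "adaptive responses"],
)
print(record.emotional_summary())
print(record.ethical_summary())
```

Storing contexts as a list reflects the rule that all pertinent domains were documented for studies covering more than one setting.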

Analysis Framework

The study employed a systematic thematic analysis to examine emotional intelligence and ethical design principles in HRI. Studies were classified by areas of application (healthcare, education, elderly care, etc.), primary emphasis (emotional, ethical, or both), and implementation contexts (social robots, general HRI, and AI chatbots) [7, 9]. Figure 1 presents the framework utilized for the thematic analysis.

The analysis also structured studies based on their methodological approach, application domain, and focus. A comprehensive framework integrated thematic analysis of emotional intelligence, ethical design principles, human-centered implementation strategies, and sector-specific applications [18, 19].

Implementation Context Analysis

The methodology involved a thorough analysis of application contexts via structured comparative tables that examined challenges specific to the setting, factors contributing to success, and social impact measures [5, 20].

Methodological Limitations

This review acknowledges several methodological constraints. A notable limitation is the prevalence of review-based studies: 33 of the 40 included studies are reviews rather than primary empirical research. This imbalance restricts the availability of direct experimental results and empirical observations. Access to full-text versions of experimental and qualitative studies was, in some instances, restricted, with certain research papers only partially available. These factors may affect the depth of analysis and the ability to draw comprehensive conclusions from empirical evidence.

Results

This section delineates our findings into three primary components. Initially, we outline the characteristics of the included studies, focusing on their methodological approaches and application domains. Then, we present a thematic analysis that emphasizes emotional intelligence in HRI and ethical design principles. Finally, we examine particular application contexts, focusing on healthcare, education, industry, and organizational settings [2]. Throughout these sections, we highlight key challenges, success factors, and social impacts identified across different implementation contexts.

Characteristics of Included Studies

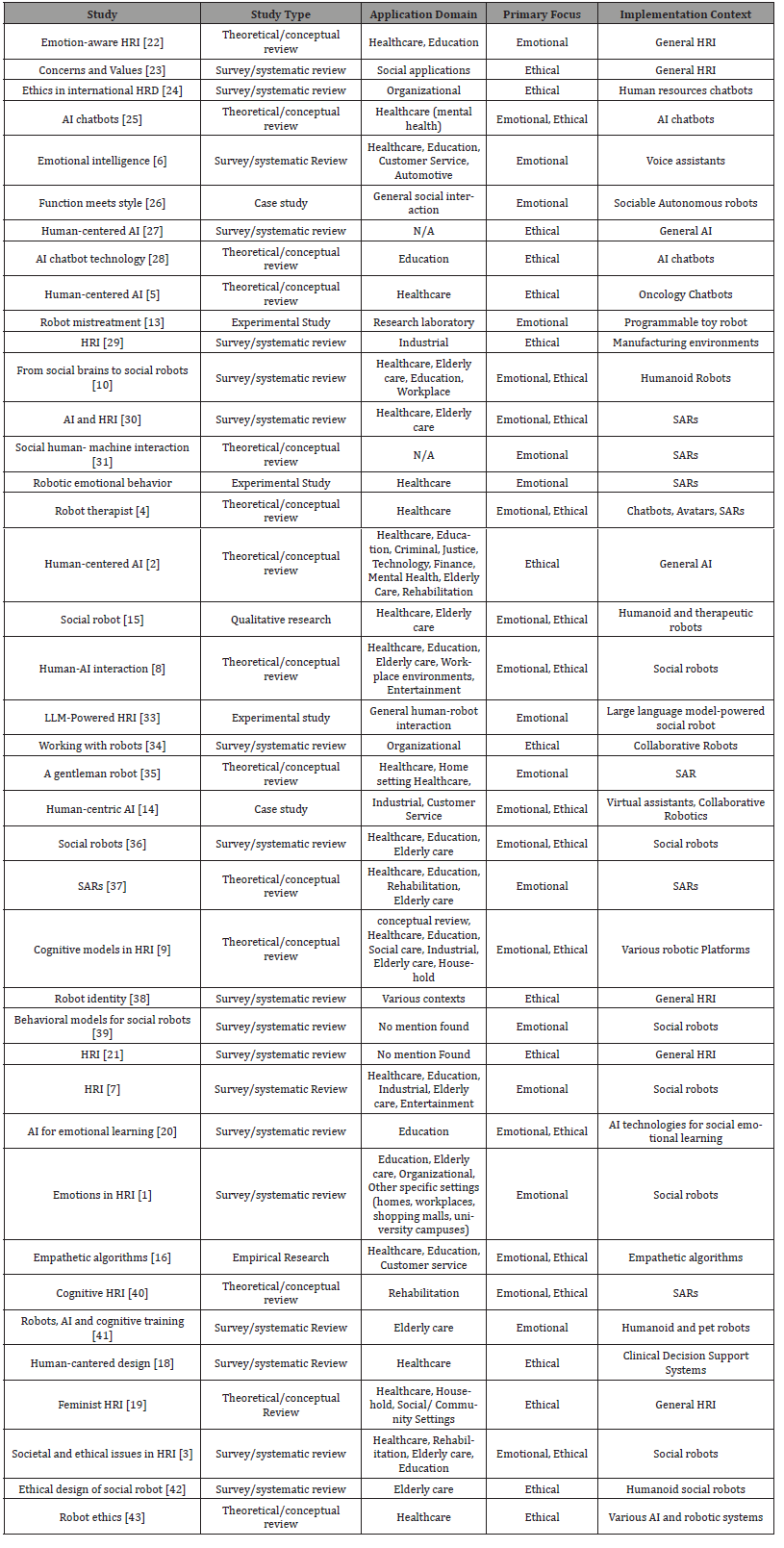

The included studies mostly focused on healthcare and educational applications while investigating ethical and emotional aspects of how people interact with robots. The prevalence of review-based methodologies indicates an active effort within the field to consolidate and synthesize knowledge across various domains and implementation contexts (Table 1).

Table 1: Characteristics of studies.

Overall, the studies demonstrated the following characteristics:

• Study types: The predominant categories were survey/ systematic reviews (19 studies) and theoretical/conceptual reviews (14 studies). Experimental studies (3 studies), case studies (2 studies), qualitative research (1 study), and empirical research (1 study) occurred with lower frequency.

• Application domains: The most researched domain was healthcare, with 22 studies, followed by education with 14 studies, and elderly care with 13 studies. Industrial and workplace settings (7), customer service (3), and rehabilitation (4) were also included. Four studies did not specify a particular domain.

• Primary focus: A total of 26 studies concentrated on emotional aspects, 27 on ethical aspects, and 13 studies investigated both dimensions.

• Implementation contexts: Social or socially assistive robots were the primary focus in 14 studies, while general HRI was addressed in 5 studies. AI chatbots, humanoid robots, and collaborative robots each appeared in 3 studies. Ten studies focused on various other contexts, such as voice assistants and clinical decision support systems.
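The reported counts can be cross-checked with simple arithmetic. The Python sketch below uses only the figures stated above; it assumes that the 26 "emotional" and 27 "ethical" studies each include the 13 studies covering both dimensions, an interpretation that is consistent with the total of 40 but is not stated explicitly.

```python
TOTAL_STUDIES = 40

# Study types are mutually exclusive, so they should sum to the total.
study_types = {
    "survey/systematic review": 19,
    "theoretical/conceptual review": 14,
    "experimental": 3,
    "case study": 2,
    "qualitative": 1,
    "empirical": 1,
}
assert sum(study_types.values()) == TOTAL_STUDIES

# Primary focus: inclusion-exclusion over the two overlapping groups.
emotional, ethical, both = 26, 27, 13
covered = emotional + ethical - both   # studies addressing at least one dimension
emotional_only = emotional - both
ethical_only = ethical - both
assert covered == TOTAL_STUDIES

# Share of review-based studies (the two review categories combined).
review_based = (
    study_types["survey/systematic review"]
    + study_types["theoretical/conceptual review"]
)
review_share = review_based / TOTAL_STUDIES

print(f"emotional only: {emotional_only}, ethical only: {ethical_only}, both: {both}")
print(f"review-based: {review_based} of {TOTAL_STUDIES} ({review_share:.1%})")
```

Application-domain counts are not checked in the same way, because a single study can be assigned to several domains and the domain totals therefore exceed 40.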

Thematic Analysis

The thematic analysis investigates three key characteristics that affect AI-driven HRI:

1. Emotional intelligence in AI systems focuses on the identification of emotions, adaptive responses, the cultivation of trust, and interactions driven by empathy, all of which influence user engagement and social connections.

2. Ethical design principles include justice, privacy protection, transparency, and human-centered AI frameworks, which ensure the socially responsible functioning of AI systems.

3. Analysis of application contexts and implementation challenges, concentrating on the deployment of AI-HRI in healthcare, education, and industrial/organizational settings, while identifying domain-specific issues, success factors, and social implications.

The analysis integrates these characteristics to provide a comprehensive evaluation of current research, emphasizing both the technological advancements and ethical dilemmas inherent in AI-driven HRI today. Additionally, it highlights potential pathways for improving emotional reactivity, ensuring ethical governance of AI, and advancing human-centered AI applications in diverse sociotechnical settings.

Emotional Intelligence in HRI

The examined studies highlight several essential components of emotional intelligence in HRI. In AI-driven systems, emotional intelligence is essential for the development of more effective, engaging, and intuitive interactions between humans and robots. The research underscores key aspects such as emotion recognition, adaptive responses, trust, bonding, and empathy in HRI.

Recognizing and expressing emotions are essential factors in improving user interaction:

• Binwal [6] suggests that the capacity of voice assistants to identify emotions may enhance user engagement and satisfaction, leading to a more personalized and intuitive user experience.

• Stock-Homburg [1] found that humans can accurately identify around 60% of robotic emotions, showing improved accuracy particularly for high-arousal emotions, suggesting that certain emotional expressions are more effectively conveyed by robots than others. This finding highlights both the potential and limitations of current robotic emotion expression capabilities.

Adaptive Emotional Responses:

• Erol et al. [31] introduce a perception architecture centered on affection for robots, facilitating behavioral adaptation in reaction to identified human emotions. This approach aims to create more responsive and context-aware robots that can adjust their actions based on user affective states.

• Ficocelli et al. [32] demonstrate that assistive behavior motivated by emotions enhances the efficacy of interactions between humans and robots. By responding dynamically to user needs and emotions, robots can provide more meaningful and effective assistance in various domains, including healthcare, customer service, and education.
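The general idea of adapting behavior to a recognized emotion can be illustrated with a small sketch. This is not the architecture of Erol et al. [31] or Ficocelli et al. [32]; the emotion labels, the response texts, the `select_response` function, and the confidence threshold of 0.7 are all hypothetical choices made for illustration. The sketch assumes an upstream recognizer that supplies an emotion label with a confidence score, and it falls back to a neutral behavior when recognition is uncertain.

```python
from typing import Dict

# Hypothetical mapping from a recognized user emotion to a robot behavior.
RESPONSE_POLICY: Dict[str, str] = {
    "sad": "offer comfort and slow the pace of speech",
    "angry": "acknowledge frustration and de-escalate",
    "happy": "mirror the positive tone",
    "fearful": "reassure and explain the next step",
}

# Fallback used when the emotion is unknown or recognition is uncertain.
NEUTRAL_RESPONSE = "continue the task and ask a clarifying question"


def select_response(emotion: str, confidence: float, threshold: float = 0.7) -> str:
    """Pick a behavior for a recognized emotion.

    `confidence` is the recognizer's score in [0, 1]; `threshold` is an
    arbitrary illustrative cut-off, not a value taken from the literature.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence < threshold or emotion not in RESPONSE_POLICY:
        return NEUTRAL_RESPONSE
    return RESPONSE_POLICY[emotion]


# Usage: a confident detection adapts the behavior, an uncertain one does not.
print(select_response("sad", 0.9))
print(select_response("sad", 0.4))
```

A conservative fallback of this kind is one possible way to limit inappropriate responses when recognition is unreliable.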

Trust and Bonding:

• Tian et al. [22] underscore the importance of emotion-aware HRI in cultivating trust. When robots are capable of recognizing and appropriately responding to emotions, they are perceived as more reliable and socially competent, which can strengthen human trust in AI systems.

• Hildt [8] suggests that robots designed to evoke emotions could enhance the formation of lasting relationships with users. Emotional connections with AI-driven entities may play a critical role in long-term adoption and user acceptance of robotic technologies.

Empathy in HRI:

• Park and Whang [7] demonstrate that empathic interactions contribute positively to liking, trust, and engagement in HRI. When robots exhibit empathy, they create a more comfortable and emotionally supportive environment for users, which can be particularly beneficial in therapeutic and caregiving contexts.

• Velagaleti [16] proposes that the integration of empathetic algorithms could enhance human emotional intelligence. By modeling and responding to emotions in a nuanced way, robots could potentially influence human emotional skills and social behaviors.

The reviewed studies indicate that incorporating emotional intelligence into AI-driven systems may enhance the quality and effectiveness of HRI; nonetheless, additional research is necessary to confirm these findings. The observed accuracy in emotion recognition, around 60%, suggests significant opportunities for improvement in this area. Future advancements in emotion detection, adaptive learning, and empathetic AI will be crucial in bridging the gap between human emotional complexity and robotic responsiveness.

Citation: Waleed M Al Nuwaiser*. From Design to Emotional and Ethical Enhancement in Human-Robot Interaction: A Systematic Review. On Journ of Robotics & Autom. 3(4): 2025. OJRAT.MS.ID.000567. DOI: 10.33552/OJRAT.2025.03.000567

Ethical Design Principles

The thematic analysis also helped us delineate various core ethical principles and obstacles linked to the design and execution of AI-driven systems within the context of HRI. These principles span key areas such as fairness, privacy, transparency, human-centered design, and ethical frameworks.

Equity and Impartiality:

• Andreas [24] emphasizes the significance of fairness and non-discrimination in AI-driven HR chatbots, arguing that AI systems used in recruitment and workplace management must be designed to mitigate bias and promote equitable outcomes for all users.

• Chow and Li [5] identify ethical challenges related to the deployment of large language models in oncology chatbots, particularly focusing on biases that arise from the reliance on predominantly Western datasets. These biases may result in disparities in healthcare recommendations and worsen existing inequities in medical treatment and patient care.

Confidentiality and Information Security:

• Fiske et al. [4] highlight concerns surrounding data privacy in AI-assisted psychotherapeutic tools, pointing out that sensitive patient information must be safeguarded to maintain confidentiality and prevent misuse.

• Garibay et al. [2] highlight privacy as a critical issue in the realm of human-centered AI.

Transparency and Explainability:

• Wang et al. [18] emphasize the critical role of transparency in AI-driven Clinical Decision Support Systems.

• Minz [9] explores the role of Explainable AI (XAI) in improving the clarity of decision-making processes.

Design Focused on Human Needs:

• Giulio et al. [23] support the integration of human values into robot design, suggesting that ethical considerations should be embedded in AI development to align robotic behavior with societal norms and expectations.

• Winkle et al. [19] propose the incorporation of feminist principles in the design of HRI to address power dynamics and enhance inclusivity. This approach emphasizes the need for participatory design processes that consider the perspectives of marginalized communities.

Ethical Frameworks:

• Ostrowski et al. [21] analyze the ethical, equity, and justice aspects involved in HRI.

• Van Wynsberghe and Li [43] introduce the HRSI model, which serves as a framework for the ethical assessment of robots utilized in healthcare settings.

Overall, the reviewed studies highlight the complex ethical landscape surrounding AI-driven HRI. While advancements in AI and robotics offer promising opportunities for enhanced efficiency and user experience, they also raise significant ethical concerns that must be addressed through careful design, regulatory oversight, and continued interdisciplinary research. Future efforts should focus on refining ethical frameworks, improving transparency, and mitigating biases to ensure that AI-driven systems operate in a manner that is fair, secure, and aligned with human values.

Human-Centered Implementation

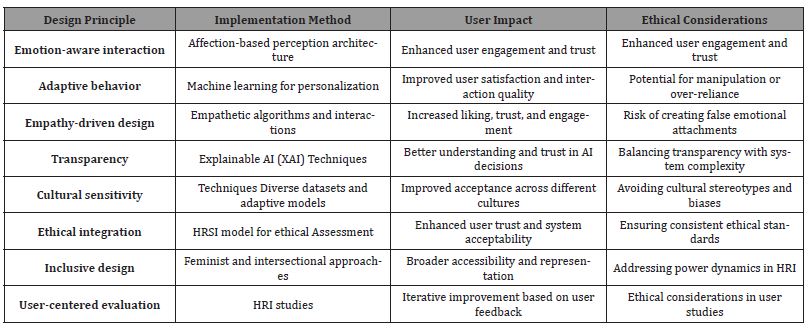

This section examines the fundamental design principles that improve human-centered HRI, including empathy-driven design, adaptive behavior, and emotion-aware interaction, all of which play a role in enhancing user happiness, trust, and engagement. The analysis delves into the significance of transparency, cultural sensitivity, and ethical integration within AI-powered systems, aiming to mitigate risks such as bias, emotional dependence, and privacy issues. Understanding diverse implementation strategies facilitates the development of AI systems that are both technically efficient and consistent with human values in their emotional and ethical dimensions.

The analysis reveals eight unique design principles for social robots, each cited in an individual study (Table 2). Implementation methods varied, with adaptive approaches and empathy-related methods referenced in two studies each. User impacts varied, with trust emerging as the most frequently reported effect across four studies.

The diversity of design principles and implementation methods reflects numerous strategies for improving HRI. Important ethical considerations arising from these human-centered approaches include:

• Privacy concerns associated with the collection of emotional data.

• The risk of forming inaccurate emotional connections.

• The potential for manipulation or over-reliance on AI systems.

• The necessity of balancing transparency with system complexity.

• The importance of examining power dynamics in HRI.

The implementation of these principles varies across different domains. For instance, healthcare prioritizes empathy and trust-building [5], while educational environments focus on adaptive learning and personalization [20].

Application Context Analysis

This section examines the intricate dynamics of AI-driven HRI, highlighting the incorporation of robots and AI assistants in healthcare for patient care and mental health support, in education for adaptive learning and social-emotional skill enhancement, and in industrial/organizational contexts to augment productivity and decision-making. Each of these fields poses distinct obstacles, such as privacy concerns in healthcare, algorithmic bias in educational AI, and equity in AI-driven job automation. Additionally, the factors that facilitate effective AI implementation, such as empathetic interactions, adaptive learning capabilities, and transparent decision-making, are analyzed to highlight best practices for improving AI’s role in human-centered applications.

Table 2: Design principles in HRI.

This section aims to provide a comprehensive understanding of the societal impact of AI-powered HRI systems by analyzing a range of application contexts, emphasizing their potential benefits and the associated ethical issues. This analysis seeks to identify critical techniques for overcoming implementation obstacles, ensuring that AI technologies are equitable, inclusive, and aligned with human requirements across diverse professional and social settings.

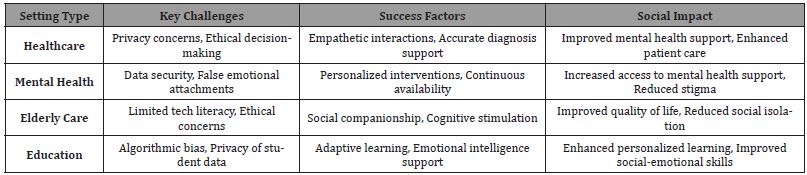

Healthcare and Educational Settings

The results from the analyzed studies (Table 3) reveal that privacy and data security concerns were identified in 75% of the settings. Ethical concerns were identified in half of the analyzed settings. Empathy and emotional intelligence, as well as personalization and adaptive learning, were identified as critical success factors in two of the four analyzed settings. Enhanced mental health support was recognized as having a significant social impact in 50% of the analyzed settings. Furthermore, AI-driven systems in healthcare and education have the potential to improve service delivery and user experiences, despite ongoing challenges. While these settings face comparable challenges, particularly in terms of privacy and ethics, their success factors and social impacts are predominantly contingent upon context.

Table 3: Analysis of AI-driven applications in Healthcare and Education.

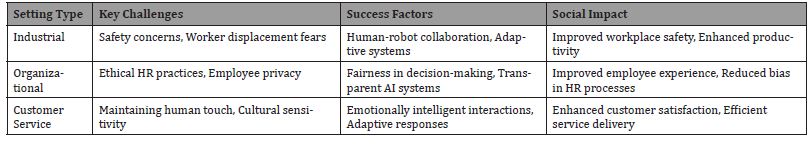

Industrial and Organizational Applications

When it comes to industrial and organizational applications, there were six unique key challenges identified across the three types of settings, with each challenge noted once (Table 4). Adaptive systems have been recognized as a crucial determinant of success in both industrial and customer service environments. Each setting type demonstrated unique social impacts, with no overlap between the settings. Insights from the application context analysis reveal that AI-driven systems face unique challenges and opportunities in different contexts. Adaptive and emotionally intelligent systems hold significance across multiple contexts. Ethical considerations, including privacy and fairness, are critical in all application contexts. The social implications of AI-driven systems depend on the specific application domain.

Table 4: Industrial and Organizational Applications.

Conclusion and Future Directions

The analysis of emotion-aware and ethical AI in HRI produces several clear conclusions. The healthcare application domain is identified as the primary area, with 22 studies, followed by education with 14 studies and elderly care with 13 studies, indicating substantial potential for AI-driven systems in these sectors. The effective implementation of these systems depends on context, with healthcare emphasizing empathy and trust-building [5], whereas educational environments prioritize adaptive learning and personalization [20].

The implementation of emotional intelligence reveals that current emotion expression systems achieve moderate success, with accuracy rates of approximately 60% for high-arousal emotions [1]. Emotion-aware systems improve user engagement and satisfaction by adjusting their responses according to emotional cues [31]. Furthermore, the exploration of empathetic interactions, which are essential for cultivating deeper connections between users and AI systems, is presented in [7]. Additionally, the study in [6] explores mechanisms for establishing trust and ensuring context-sensitive responses, emphasizing the importance of personalized and adaptive AI-driven interactions.

Fiske et al. [4] emphasize the efficacy of incorporating ethical principles into AI systems via mechanisms aimed at protecting privacy. Furthermore, transparent methodologies in AI decision-making are essential for cultivating user trust. Maintaining equity and impartiality in algorithms enhances the ethical framework of AI interactions, thereby fostering fairness and accountability in system design. Recent studies identify key areas necessitating additional research to improve AI-driven HRI. A primary objective is to enhance the accuracy of emotion recognition and expression beyond their existing constraints [1]. Furthermore, enhancing adaptive response mechanisms to address more intricate emotional cues is crucial for promoting more authentic and significant interactions. The development of cross-cultural emotional intelligence competencies is essential, enabling AI systems to accurately recognize and respond to varied emotional expressions. Additionally, algorithms should be developed to demonstrate enhanced robustness and empathy, allowing for effective adaptation across diverse contexts [16].

The establishment of standardized ethical assessment protocols is essential for the development of comprehensive ethical frameworks [21]. Implementing stringent privacy protection measures will safeguard user data and trust, while protocols must be introduced to mitigate the risks associated with false emotional attachments. Improving transparency in AI decision-making frameworks is essential for ensuring accountability and promoting ethical AI deployment. Furthermore, research initiatives ought to broaden their scope beyond the predominant emphasis on healthcare and education to investigate underrepresented areas. Examining the long-term effects of interactions with emotional AI will yield insights into its influence on human psychology and behavior. Comparative studies of HRI in various cultural contexts will contribute to the development of globally adaptive AI models. Furthermore, analyzing the impact of emotional AI on vulnerable populations will be essential for promoting fair and advantageous AI implementations. Research aimed at advancing AI-driven systems should prioritize the development of sophisticated emotion recognition technologies, culturally adaptive interaction models, standardized ethical guidelines, and a comprehensive understanding of the long-term implications of emotional bonding between humans and robots.

Future research should improve methodological rigor by increasing the quantity of experimental and empirical investigations, conducting longitudinal studies, expanding cross-cultural analyses, and utilizing more stringent evaluation methods. This area presents considerable opportunities for enhancing HRI in various sectors, especially healthcare, education, and elderly care. Its effectiveness is contingent upon the resolution of existing technical and ethical challenges. The incorporation of feminist design principles in conjunction with ethical assessment models indicates an increasing acknowledgment of the necessity for inclusive and ethically responsible development methodologies. Although current technologies demonstrate significant potential, considerable efforts remain necessary to improve their technical capabilities and ethical frameworks. The field is advancing towards a more human-centered and ethically responsible approach; however, ongoing research and development are crucial to fully leverage AI’s potential in meeting human needs across various applications.

Acknowledgement

None.

Conflict of interest

No conflict of interest.

References

- R Stock Homburg (2021) Survey of Emotions in Human-Robot Interactions: Perspectives from Robotic Psychology on 20 Years of Research, Int J of Soc Robotics 14(2): 389-411.

- O Ozmen Garibay (2023) Six Human-Centered Artificial Intelligence Grand Challenges, International Journal of Human-Computer Interaction 39(3): 391-437.

- R Wullenkord, F Eyssel (2020) Societal and Ethical Issues in HRI, Curr Robot Rep 1(3): 85-96.

- A Fiske, P Henningsen, A Buyx (2019) Your Robot Therapist Will See You Now: Ethical Implications of Embodied Artificial Intelligence in Psychiatry, Psychology, and Psychotherapy, J Med Internet Res 21(5): e13216.

- JCL Chow, K Li (2024) Ethical Considerations in Human-Centered AI: Advancing Oncology Chatbots Through Large Language Models, JMIR Bioinform Biotech 5: e64406.

- Ankur Binwal (2024) Emotional Intelligence in Voice Assistants: Advancing Human-AI Interaction, Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol 10(5): 513-523.

- S Park, M Whang (2022) Empathy in Human-Robot Interaction: Designing for Social Robots, IJERPH 19(3): 1889.

- E Hildt (2021) What Sort of Robots Do We Want to Interact With? Reflecting on the Human Side of Human-Artificial Intelligence Interaction, Front. Comput. Sci vol. 3.

- IMM (2024) Exploring Cognitive Models in Human-Robot Interaction, IJFMR 6(5).

- ES Cross, R Hortensius, A Wykowska (2019) From social brains to social robots: applying neurocognitive insights to human-robot interaction, Phil. Trans. R. Soc. B 374(1771): 20180024.

- AP Siddaway, AM Wood, LV Hedges (2019) How to do a systematic review: a best practice guide for conducting and reporting narrative reviews, meta-analyses, and meta-syntheses, Annual review of psychology 70(1): 747-770.

- A Liberati (2009) The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration, Bmj vol. 339.

- J Connolly (2020) Prompting Prosocial Human Interventions in Response to Robot Mistreatment, in Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, ACM, pp. 211-220.

- EM Lase F Nkosi (2023) Human-Centric AI: Understanding and Enhancing Collaboration between Humans and Intelligent Systems, Algrasy 1(1): 33-40.

- DH Garcia, PG Esteban, HR Lee, M Romeo, E Senft, et al. (2019) Social Robots in Therapy and Care, in 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), IEEE.

- Sesha Bhargavi Velagaleti (2024) Empathetic Algorithms: The Role of AI in Understanding and Enhancing Human Emotional Intelligence, jes 20(3s): 2051-2060.

- MJ Page (2021) The PRISMA 2020 statement: an updated guideline for reporting systematic reviews, bmj vol. 372.

- L Wang (2023) Human-centered design and evaluation of AI-empowered clinical decision support systems: a systematic review, Front. Comput. Sci vol. 5.

- K Winkle (2023) Feminist Human-Robot Interaction, in Proceedings of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, ACM, pp. 72-82.

- SS Sethi, K Jain (2024) AI technologies for social emotional learning: recent research and future directions, JRIT 17(2): 213-225.

- AK Ostrowski (2022) Ethics, Equity, & Justice in Human-Robot Interaction: A Review and Future Directions, in 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), IEEE, pp. 969-976.

- L Tian, S Oviatt, M Muszynski, B Chamberlain, J Healey, et al. (2022) Emotion-aware human-robot interaction and social robots, Applied Affective Computing.

- AA Giulio, B Tony, S Micol (2025) Concerns and Values in Human-Robot Interactions: A Focus on Social Robotics.

- NB Andreas (2024) Ethics in international HRD: examining conversational AI and HR chatbots, SHR 23(3): 121-125.

- L Balcombe (2023) AI Chatbots in Digital Mental Health, Informatics 10(4): 82.

- C Breazeal (2004) Function Meets Style: Insights from Emotion Theory Applied to HRI, IEEE Trans. Syst., Man, Cybern. C 34(2): 187-194.

- T Capel, M Brereton (2023) What is Human-Centered about Human-Centered AI? A Map of the Research Landscape, in Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, ACM, pp. 1-23.

- SA Chauncey, HP McKenna (2023) A framework and exemplars for ethical and responsible use of AI Chatbot technology to support teaching and learning, Computers and Education: Artificial Intelligence 5: 100182.

- E Coronado, T Kiyokawa, GAG Ricardez, IG Ramirez-Alpizar, G Venture, et al. (2022) Evaluating quality in human-robot interaction: A systematic search and classification of performance and human-centered factors, measures and metrics towards an industry 5.0, Journal of Manufacturing Systems 63: 392-410.

- Alexander Obaigbena (2024) AI and human-robot interaction: A review of recent advances and challenges, GSC Adv. Res. Rev 18(2): 321-330.

- BA Erol, A Majumdar, P Benavidez, P Rad, KKR Choo, et al. (2020) Toward Artificial Emotional Intelligence for Cooperative Social Human-Machine Interaction, IEEE Trans. Comput. Soc. Syst 7(1): 234-246.

- M Ficocelli, J Terao, G Nejat (2016) Promoting Interactions Between Humans and Robots Using Robotic Emotional Behavior, IEEE Trans. Cybern 46(12): 2911-2923.

- CY Kim, CP Lee, B Mutlu (2024) Understanding Large-Language Model (LLM)-powered Human-Robot Interaction, in Proceedings of the 2024 ACM/IEEE International Conference on Human-Robot Interaction, ACM, pp. 371-380.

- S Kim (2022) Working with Robots: Human Resource Development Considerations in Human–Robot Interaction, Human Resource Development Review 21(1): 48-74.

- R Kittmann, T Fröhlich, J Schäfer, U Reiser, F Weißhardt, et al. (2015) Let me Introduce Myself: I am Care-O-bot 4, a Gentleman Robot, in Mensch und Computer 2015 - Tagungsband, DE GRUYTER, pp. 223-232.

- V Lim, M Rooksby, ES Cross (2020) Social Robots on a Global Stage: Establishing a Role for Culture During Human-Robot Interaction, Int J of Soc Robotics 13(6): 1307-1333.

- M Matarić (2023) Socially Assistive Robotics: Methods and Implications for the Future of Work and Care, in Frontiers in Artificial Intelligence and Applications, IOS Press.

- L Miranda, G Castellano, K Winkle (2023) Examining the State of Robot Identity, in Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, ACM, pp. 658-662.

- O Nocentini, L Fiorini, G Acerbi, A Sorrentino, G Mancioppi, et al. (2019) A Survey of Behavioral Models for Social Robots, Robotics 8(3): 54.

- Fosch Villaronga Eduard, Barco Alex, Özcan Beste, Shukla Jainendra (2016) An Interdisciplinary Approach to Improving Cognitive Human-Robot Interaction – A Novel Emotion-Based Model, in Frontiers in Artificial Intelligence and Applications, IOS Press.

- AA Vogan, F Alnajjar, M Gochoo, S Khalid (2020) Robots, AI, and Cognitive Training in an Era of Mass Age-Related Cognitive Decline: A Systematic Review, IEEE Access 8: 18284-18304.

- S Yuan, S Coghlan, R Lederman, J Waycott (2023) Ethical Design of Social Robots in Aged Care: A Literature Review Using an Ethics of Care Perspective, Int J of Soc Robotics 15(9-10): 1637-1654.

- A van Wynsberghe, S Li (2019) A paradigm shift for robot ethics: from HRI to human-robot-system interaction (HRSI), MB, 9: 11-21.

Waleed M Al Nuwaiser*. From Design to Emotional and Ethical Enhancement in Human-Robot Interaction: A Systematic Review. On Journ of Robotics & Autom. 3(4): 2025. OJRAT.MS.ID.000567.


Artificial Intelligence (AI); Human-Robot Interaction (HRI); Socially Assistive Robots (SARs); AI-driven systems; Chatbots; Computer Vision; Machine Learning


This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.