Research Article

MindScan And MindQ: Digital Self-Screening Tests for Early Alzheimer’s Disease

Sierra T Pence1, Yuhan Liu2, Alexandra R Linares1, Caroline A Hellegers1*, Prahlad Krishnan3, K Ranga Rama Krishnan4 and P Murali Doraiswamy1,5

1Duke University School of Medicine

2Stanford University School of Medicine

3Psyber

4Rush University School of Medicine

5Duke Institute for Brain Sciences

*Corresponding author: Caroline A Hellegers, Duke University School of Medicine, USA.

Received Date: April 06, 2023; Published Date: April 12, 2023

Abstract

Background: The increasing use of web and smartphone tools by the elderly offers an opportunity to develop and scale digital tools for home-based self-assessment of cognitive performance. Such tools may aid in the early detection of Alzheimer’s disease (AD) and complement existing clinic-based neuropsychological, biomarker, and imaging assessments.

Methods: This was a pilot, single-center evaluation of the utility of two self-administered digital tools, MindScan and MindQ, to discriminate subjects with mild AD or mild cognitive impairment (MCI) from those with normal cognition (NC). MindScan is an objective performance test completed by the subject online in an unsupervised fashion. MindQ is an online informant questionnaire about the subject’s functioning.

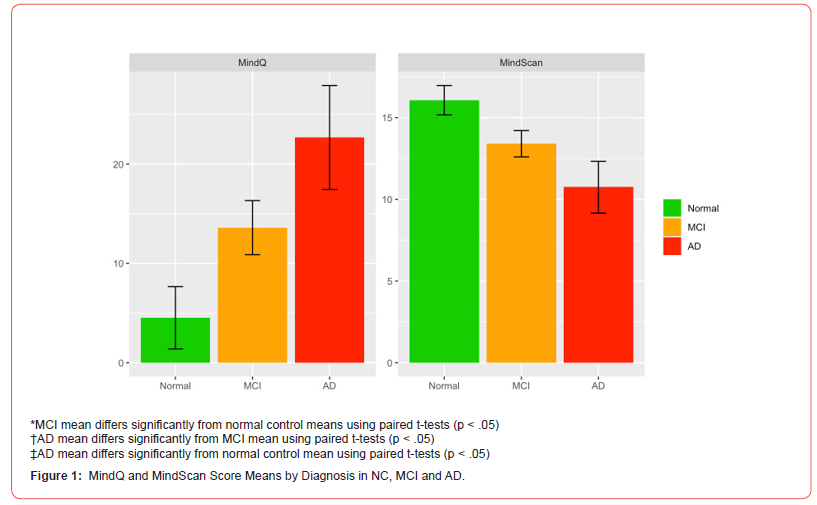

Results: MindScan scores differed significantly between NC [16.07 (CI 90%: 15.17-16.97)], MCI patients [13.41 (CI: 12.60-14.21)] and AD

patients [10.75 (CI: 9.16-12.33)] (p<.001). The MindQ also differed significantly between NC [4.52 (CI 90%: 1.39-7.66)], MCI patients [13.60 (CI:

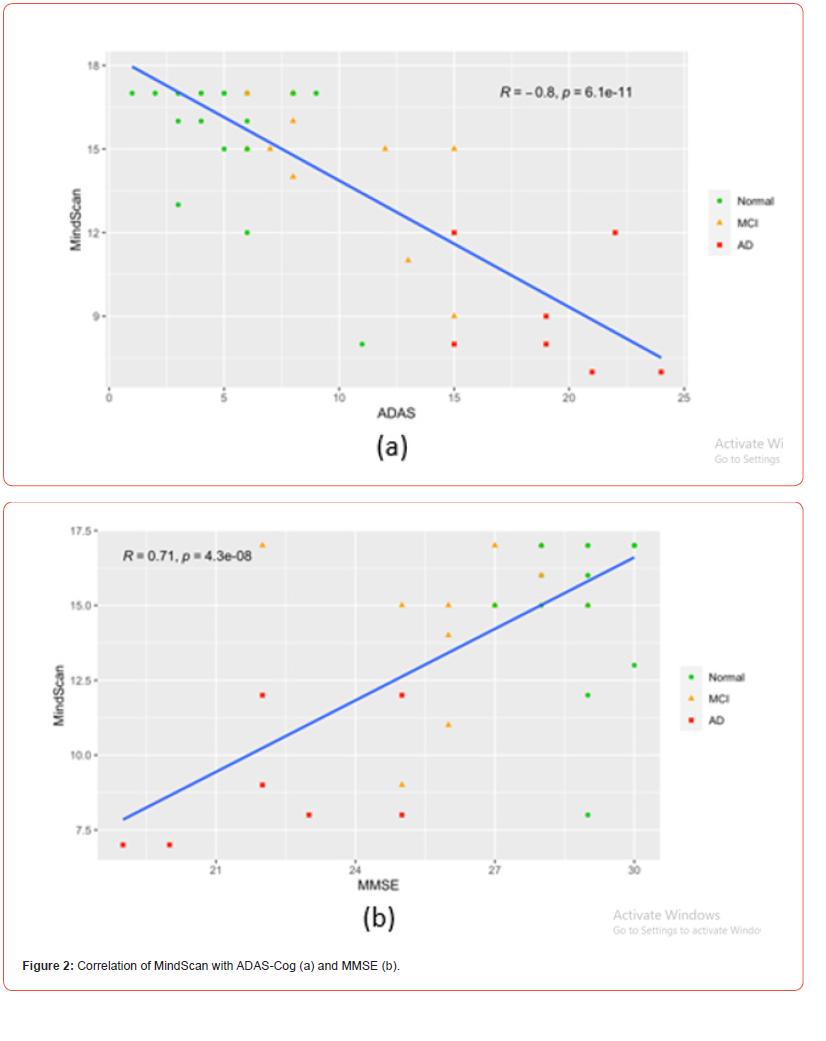

10.88-16.33)], and AD patients [22.69 (CI: 17.45-27.92)] (p<.001). The MindScan was significantly inversely correlated to two commonly used tests,

the ADAS (R=-0.80, p<.001) and the MMSE (R=0.71, p<.001). The accuracy of the MindQ (AUC=0.89) was similar to that of the FAQ (AUC=0.91).

Conclusions: We report the initial successful development of two rapid, unsupervised, self-administered online tests aimed at home-based screening for early AD and MCI. Further validation in a larger, more diverse sample of patients is planned. Ultimately, combining such home-based digital cognitive self-assessments with blood-based biomarker tests may facilitate large-scale screening for MCI, which in turn could benefit both consumers and public health.

Introduction

Worldwide, an estimated 50 million people live with Alzheimer’s disease (AD), and these numbers are expected to triple in the coming decades. In addition, tens of millions of elderly people are at risk for AD due to the presence of mild cognitive impairment (MCI). Early and accurate detection of memory loss is a public health priority and is critical to developing strategies that slow disease progression and maintain independent functioning. Clinical neuropsychological testing combined with neuropsychiatric examination has formed the cornerstone of the clinical diagnosis of AD for decades. More recently developed PET scans and cerebrospinal fluid biomarkers allow pathological subclassification of clinically diagnosed AD and MCI patients, but they are expensive, in some cases invasive, and unsuitable for population-level screening.

The rapid growth of mobile and digital computing technologies offers great promise both for the early detection of AD/MCI and for self-monitoring. Digital self-test tools also offer many advantages over traditional neuropsychological tests. These include patient-centric advantages such as convenience, privacy, and 24/7 access, as well as features that promote scale of reach, including automated scoring and cloud-based archiving. Many cognitive tests are used to assess memory, but long administration times, insensitivity to early changes in highly educated individuals, and the need for an examiner to administer them limit their clinical use as screening tools [1]. At least 40 brief traditional paper-and-pencil cognitive tests have been developed and tested thus far [2], but many still fall short as screening tools for MCI. Reducing administrative burden through computerized testing or self-administration has shown promise in recent years: the written, self-administered Test Your Memory (TYM) screening tool accurately differentiated diagnostic groups, even in the clinical setting [3,4].

The Cognitive Function Test, an online self-administered test, showed correlations with MMSE scores in cognitively normal older individuals [5]. Many other online screening tools have shown correlations with various other memory tests in community populations [6-8]. The CogState Brief Battery, both online and self-administered, was validated in patients with mild traumatic brain injury, schizophrenia, or AIDS dementia complex [9]. Although many screening tests correlate highly with other neurocognitive tests, additional research in MCI is needed. Moreover, there is a lack of well-validated computerized measures of instrumental activities of daily living (IADLs), even though combining informant-rated scales with neuropsychological testing has been shown to yield a more accurate diagnosis than either measure alone [10].

Our online self-administered test, MindScan, provides an easy-to-use, accessible tool to alert individuals showing signs of MCI and to reassure cognitively normal individuals that their memory is stable. MindScan combines questions on orientation, spatial skills, and short-term memory to assess cognitive function. MindQ, a companion online informant-completed questionnaire about IADLs, is based on a paper version that was successfully used to identify clinical subpopulations [11]. The aim of the present study was to evaluate the effectiveness of the self-administered online MindScan and MindQ tests in identifying MCI and early AD.

Methods

Participants and IRB Approval

Forty-five volunteers were recruited through referrals and/or advertisements following approval from the Duke IRB and written informed consent from the subject and/or legal representative, as appropriate. Participants were classified as having normal cognition (NC), amnestic mild cognitive impairment (MCI), or mild probable AD dementia (AD) based on standard criteria. Participants were aged 50-90 years and were assigned a diagnosis based on subject and informant histories, neurocognitive test scores, physical examinations, and physician judgment. Each subject was required to have a reliable informant in regular contact with the subject.

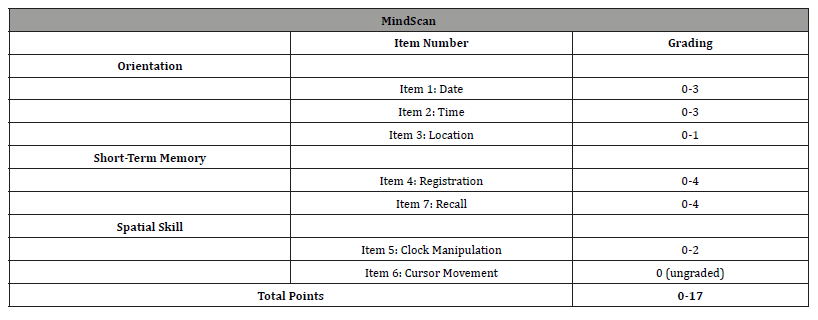

MindScan, MMSE and ADAS-Cog-11 Tests

The MindScan test consists of 6 graded items covering orientation (date, time, and location), memory (registration and recall of 4 words), and spatial function (assessed using a manual clock). Test items and scoring are summarized in [Table 1]. All responses were recorded by mouse click or by typing answers on the keyboard. All participants received the same instructions and used the same PC, mouse, and keyboard to complete the test. A staff member gave a brief introduction to the test, after which the subject was left alone in a quiet room to self-administer and complete it. The MindScan took approximately 15 minutes to complete.
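To make the item-to-domain structure concrete, the following Python sketch groups per-item points into the orientation, short-term recall, and spatial skill subtotals analyzed in the Results. It is illustrative only: the item keys, the placement of registration in the recall domain, and any point values passed in are hypothetical, since the actual scoring rules are given in [Table 1] and are not reproduced here.

```python
from typing import Dict

# Hypothetical item keys. The domain assignment follows the item descriptions
# in the text; placing "registration" in the short-term recall domain is an
# assumption.
ITEM_DOMAINS: Dict[str, str] = {
    "date": "orientation",
    "time": "orientation",
    "location": "orientation",
    "registration": "short_term_recall",
    "recall": "short_term_recall",
    "clock": "spatial_skill",
}


def domain_subtotals(item_points: Dict[str, float]) -> Dict[str, float]:
    """Sum caller-supplied item points into domain subtotals and a total.

    No real MindScan point values are encoded here; the caller provides them.
    """
    missing = sorted(set(ITEM_DOMAINS) - set(item_points))
    if missing:
        raise ValueError(f"missing item scores: {missing}")
    subtotals: Dict[str, float] = {}
    for item, domain in ITEM_DOMAINS.items():
        subtotals[domain] = subtotals.get(domain, 0) + item_points[item]
    subtotals["total"] = sum(subtotals[d] for d in set(ITEM_DOMAINS.values()))
    return subtotals


if __name__ == "__main__":
    # Made-up points for one subject, for illustration only.
    example = {"date": 2, "time": 1, "location": 1,
               "registration": 4, "recall": 3, "clock": 2}
    print(domain_subtotals(example))
    # {'orientation': 4, 'short_term_recall': 7, 'spatial_skill': 2, 'total': 13}
```

Keeping the item-to-domain map separate from the point values means the same code could hold whatever weights Table 1 actually specifies.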

Table 1: MindScan Items and Scoring.

Following this, all subjects were administered the Mini-Mental State Examination (MMSE) and the Alzheimer’s Disease Assessment Scale-Cognitive Subscale (ADAS-Cog-11) by a trained staff member.

MindScan Test-Retest Reproducibility

The participants were then asked to repeat the MindScan at home on their personal computers 5 more times (once a day) over the following week to assess test reproducibility and within-subject learning curves. Three NC, 5 MCI, and 2 AD participants were unable to complete the home testing because of technical difficulties.
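One simple way to summarize a within-subject learning curve over the clinic session and the five home sessions is the least-squares slope of score against session number. The sketch below assumes equally spaced sessions indexed 0, 1, 2, ...; it is not necessarily the method the authors used, and the example scores are invented.

```python
from statistics import mean
from typing import Sequence


def learning_slope(scores: Sequence[float]) -> float:
    """Ordinary least-squares slope of score versus session index.

    Sessions are assumed to be equally spaced and indexed 0, 1, 2, ...
    A positive slope suggests a practice (learning) effect across repeated
    administrations; a slope near zero suggests stable performance.
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two sessions")
    sessions = list(range(n))
    x_bar = mean(sessions)
    y_bar = mean(scores)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(sessions, scores))
    sxx = sum((x - x_bar) ** 2 for x in sessions)
    return sxy / sxx


if __name__ == "__main__":
    # Invented scores: one clinic session followed by five daily home sessions.
    print(learning_slope([14, 15, 15, 16, 16, 17]))
```

A per-subject slope can then be compared across diagnostic groups; a dedicated reliability statistic such as an intraclass correlation would be a separate analysis.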

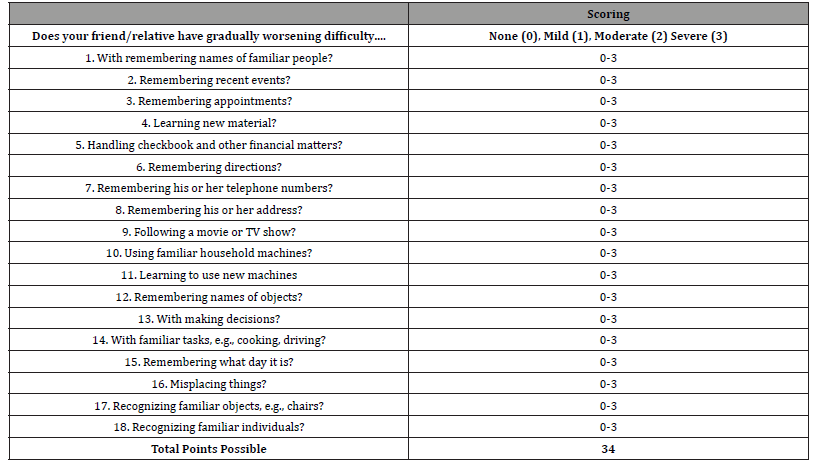

MindQ Test

The MindQ was completed by the subject’s informant either in person on the staff computer during the participant’s visit or within 30 days of the visit on a personal computer. The MindQ consists of 18 items and is based on the Brief Cognitive Scale [11], which uses simple wording and focuses on whether a patient has had worsening difficulty in everyday activities such as remembering names or misplacing things. Informants either completed the online questionnaire in person or were contacted by phone and emailed directions on how to complete it at home. The items and scoring of the MindQ are described in [Table 2].

Statistical analyses

Descriptive statistics were computed for demographic data, and cognitive test results were analyzed by ANOVA for associations with gender, age, and education. Significance was set at p < 0.05. Pearson correlations were computed between the novel pilot tests and established cognitive tests; a correlation of at least 0.40 in magnitude with p < 0.01 was considered sufficient to support concurrent validity. Individual items, as well as groups of items in the same domain, were analyzed using Kruskal-Wallis rank sum tests to identify which items of the pilot tests best discriminated between diagnostic groups.
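The two main statistics named above can be sketched in dependency-free Python: Pearson's r with its t statistic (on n - 2 degrees of freedom), and the Kruskal-Wallis H statistic with average ranks for ties and the usual tie correction. This is a minimal illustration, not the authors' analysis code; p-values require the t and chi-square distributions, which a statistics library such as SciPy (`scipy.stats.pearsonr`, `scipy.stats.kruskal`) provides. Reading the 0.40 criterion as a threshold on |r| is an assumption, made because the MindScan correlates negatively with the ADAS-Cog.

```python
import math
from collections import Counter
from typing import List, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between paired scores."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least 3 paired observations")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    if abs(r) >= 1:
        return math.copysign(math.inf, r)
    return r * math.sqrt((n - 2) / (1 - r * r))


def meets_validity_threshold(r: float, threshold: float = 0.40) -> bool:
    """Magnitude part of the concurrent-validity criterion (p < 0.01 is checked separately)."""
    return abs(r) >= threshold


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups: Sequence[float]) -> float:
    """Tie-corrected Kruskal-Wallis H across two or more independent groups."""
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    h, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        h += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * h - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n).
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - ties / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    return h / correction
```

Tie handling matters here because item scores take only a few discrete values, so many subjects share the same rank.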

Results

Demographics

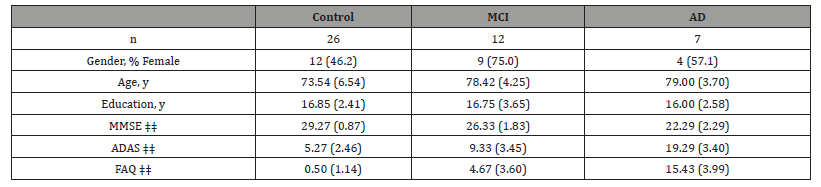

[Table 3] displays baseline characteristics for the cognitively normal (n=26), MCI (n=12), and AD (n=7) subjects included in this study. Age differed significantly between groups (p=.015), with MCI (p=.009) and AD (p=.01) subjects being older than the cognitively normal group. Education and gender did not differ significantly between groups. As expected, one-way ANOVAs showed that the groups differed significantly in MMSE, ADAS-Cog, and FAQ scores (p<.001).
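For reference, the one-way ANOVA F statistic used for these group comparisons is the ratio of between-group to within-group mean squares. The sketch below is unadjusted; age-controlled comparisons such as those reported for the MindScan and MindQ would require an ANCOVA or regression model, which this sketch does not implement. The p-value would come from the F distribution (for example, via `scipy.stats.f_oneway`), and the toy data are invented.

```python
from typing import Sequence, Tuple


def one_way_anova(*groups: Sequence[float]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for an unadjusted one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or any(len(g) == 0 for g in groups) or n <= k:
        raise ValueError("need >= 2 non-empty groups and n > k")
    group_means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n
    # Between-group sum of squares: group size times squared mean deviation.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Within-group sum of squares: deviations from each group's own mean.
    ss_within = sum((v - m) ** 2
                    for g, m in zip(groups, group_means) for v in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Invented scores for three small groups (e.g., NC, MCI, AD).
    print(one_way_anova([17, 16, 15], [14, 13, 12], [11, 10, 9]))
    # (27.0, 2, 6)
```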

Table 2: MindQ Items and Scoring.

Table 3: Demographic and Clinical Assessments by Diagnostic Group [Mean (SD)].

*MCI mean differs significantly from normal control means using paired t-tests (p < .05)

†AD mean differs significantly from MCI mean using paired t-tests (p < .05)

‡AD mean differs significantly from normal control mean using paired t-tests (p < .05)

‡‡ Groups differ significantly using one-way ANOVA (p < .001)

Performance of MindScan Test

After controlling for age, MindScan scores differed significantly between NC (16.07, 90% CI: 15.17-16.97) and the cognitively impaired groups (MCI patients 13.41, CI: 12.60-14.21; AD patients 10.75, CI: 9.16-12.33). ANOVAs showed that the MindScan significantly differentiated the control and impaired groups (p<.001). [Figure 1] shows MindScan scores by diagnosis. AD and MCI groups performed significantly worse than NC in both the orientation and short-term recall domains (p<.001). The spatial skill domain also differed significantly between groups (p=0.03). The short-term recall domain score showed the strongest association with diagnosis (Cramér’s V=0.71), followed by the orientation domain (V=0.54) and the spatial skill domain (V=0.28). Among individual questions, the orientation question on “date” was most strongly associated with diagnosis (Cramér’s V=0.75), followed by the “recall” score (V=0.59).
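Cramér's V rescales the chi-square statistic of a contingency table (here, diagnostic group by item or domain score) to lie between 0 (no association) and 1 (perfect association): V = sqrt(chi2 / (n (min(r, c) - 1))). The sketch below computes it without continuity correction; whether the authors binned scores or applied any correction is not stated, and the example counts are invented. SciPy offers an equivalent in `scipy.stats.contingency.association`.

```python
import math
from typing import Sequence


def cramers_v(table: Sequence[Sequence[int]]) -> float:
    """Cramér's V for an r x c table of counts (no continuity correction)."""
    n_rows, n_cols = len(table), len(table[0])
    if n_rows < 2 or n_cols < 2 or any(len(row) != n_cols for row in table):
        raise ValueError("need a rectangular table with >= 2 rows and >= 2 columns")
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(n_rows)) for j in range(n_cols)]
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("empty row or column")
    chi2 = 0.0
    for i in range(n_rows):
        for j in range(n_cols):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (table[i][j] - expected) ** 2 / expected
    return math.sqrt(chi2 / (n * (min(n_rows, n_cols) - 1)))


if __name__ == "__main__":
    # Invented counts: rows = NC, MCI, AD; columns = item correct / incorrect.
    print(round(cramers_v([[24, 2], [6, 6], [1, 6]]), 3))
```

Unlike a correlation coefficient, V carries no sign, so it describes the strength but not the direction of the association with diagnosis.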

Performance of the MindQ Test

After controlling for age, MindQ scores differed significantly between NC [4.52 (90% CI: 1.39-7.66)], MCI patients [13.60 (CI: 10.88-16.33)], and AD patients [22.69 (CI: 17.45-27.92)]. ANOVAs showed that the MindQ significantly differentiated all 3 diagnostic groups from one another (p<.001). Item 15 (“Does your friend/relative have gradually worsening difficulty remembering what day it is?”) showed the strongest association with diagnosis (Cramér’s V=0.572).

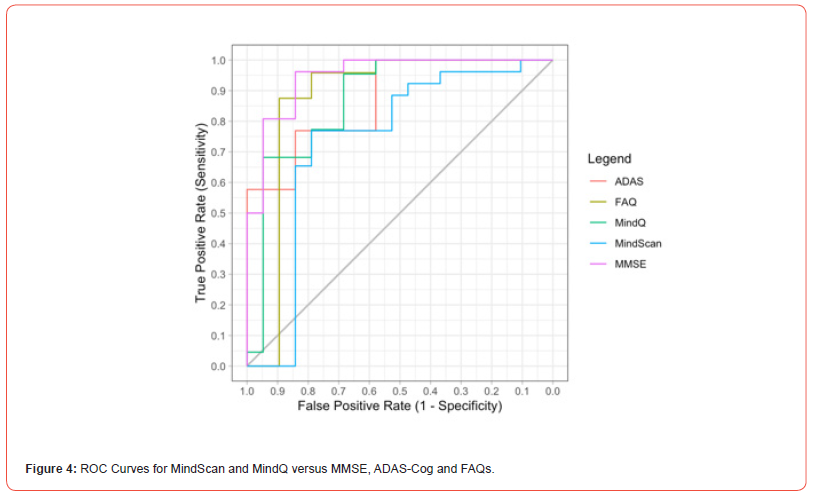

Accuracy of MindScan Compared to MMSE, FAQ and ADAS-Cog

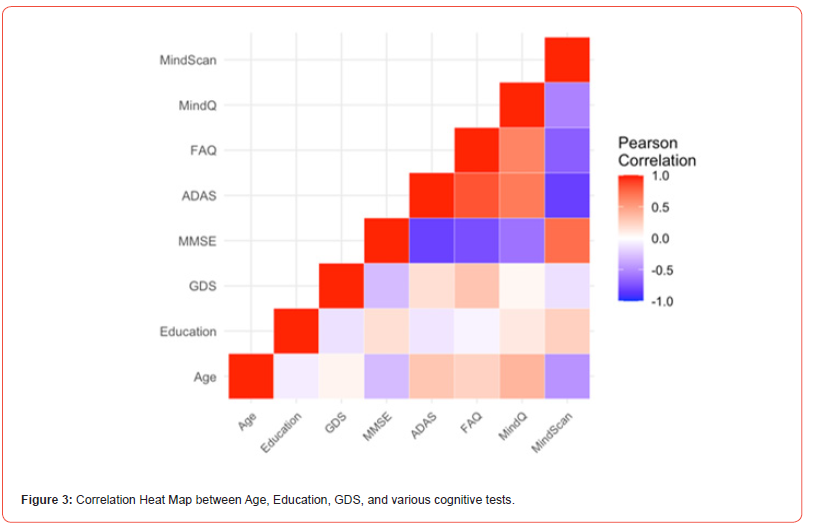

The MindScan was significantly correlated with the ADAS-Cog (R=-0.80, p<.001) [Figures 2,3] and the MMSE (R=0.71, p<.001). Figure 3 shows a heatmap of correlations among these tests and the FAQ and Geriatric Depression Scale (GDS). The ROC curves in [Figure 4] compare the ability of MindScan, MindQ, and other common cognitive tests to distinguish cognitively normal subjects from the combined patient group (MCI + AD dementia). The AUC was 0.813 (90% CI: 0.985-0.941) for the MindScan, 0.967 (90% CI: 0.923-1.000) for the MMSE, and 0.900 (90% CI: 0.816-0.983) for the ADAS-Cog. The AUC was 0.892 (90% CI: 0.790-0.994) for the MindQ and 0.919 (90% CI: 0.828-1.000) for the FAQ.
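The AUC values above can be read as a probability: the chance that a randomly chosen impaired subject scores worse than a randomly chosen cognitively normal subject, with ties counted as one half (the Mann-Whitney formulation of the ROC area). The sketch below computes that empirical AUC directly. The direction flag is needed because lower scores indicate impairment on the MindScan and MMSE, whereas higher scores do so on the ADAS-Cog, FAQ, and MindQ. The example scores are invented, and confidence intervals like those reported above would need an additional method (for example, DeLong's method or bootstrapping), which this sketch does not implement.

```python
from typing import Sequence


def empirical_auc(impaired: Sequence[float], normal: Sequence[float],
                  higher_means_impaired: bool) -> float:
    """Mann-Whitney estimate of the ROC AUC for separating impaired from normal.

    Counts, over all impaired-normal pairs, how often the impaired subject's
    score points further toward impairment; ties contribute 0.5.
    """
    if not impaired or not normal:
        raise ValueError("both groups must be non-empty")
    sign = 1 if higher_means_impaired else -1
    wins = 0.0
    for a in impaired:
        for b in normal:
            diff = sign * (a - b)
            if diff > 0:
                wins += 1.0
            elif diff == 0:
                wins += 0.5
    return wins / (len(impaired) * len(normal))


if __name__ == "__main__":
    # Invented MindScan-like scores (lower = worse) for a few subjects.
    print(empirical_auc([10, 13, 15], [15, 16, 17], higher_means_impaired=False))
```

The pairwise loop is O(n_impaired * n_normal), which is negligible for a sample of 45 subjects.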

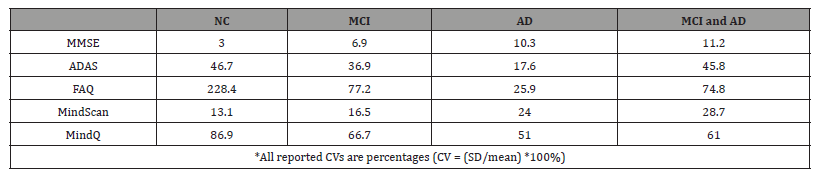

Variability of Tests

As shown in [Table 4], the MMSE showed the lowest variability among all participants, with a coefficient of variation (CV) of 10.7%, compared with 67.2% for the ADAS-Cog and 22.5% for the MindScan. The FAQ and MindQ both showed higher variability, with CVs of 145.1% and 102.2%, respectively. Compared with the MCI and AD groups individually, normal controls showed the lowest variability for the MMSE and MindScan and the highest variability for the ADAS-Cog, FAQ, and MindQ. Similarly, compared with cognitively impaired subjects (MCI and AD combined), normal controls showed lower variability for the MMSE and MindScan, higher variability for the FAQ and MindQ, and comparable variability for the ADAS-Cog.
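The CV is the standard deviation expressed as a percentage of the mean, CV = 100 × SD / mean. Because the mean is in the denominator, the same spread yields a much larger CV on a scale where a group scores near zero, as cognitively normal subjects tend to do on informant questionnaires such as the FAQ and MindQ, than on a scale where scores sit well above zero. The sketch below assumes the sample (n - 1) standard deviation; which form the authors used is not stated, and the example values are invented.

```python
from statistics import mean, stdev
from typing import Sequence


def coefficient_of_variation(values: Sequence[float]) -> float:
    """CV in percent, using the sample (n - 1) standard deviation."""
    if len(values) < 2:
        raise ValueError("need at least two values")
    m = mean(values)
    if m == 0:
        raise ValueError("CV is undefined when the mean is zero")
    return 100 * stdev(values) / m


if __name__ == "__main__":
    # Invented values with the same SD (1.0) but different means.
    near_floor = [0, 1, 2]        # e.g., an informant scale in healthy controls
    near_ceiling = [15, 16, 17]   # e.g., a performance test score
    print(coefficient_of_variation(near_floor))    # 100.0
    print(coefficient_of_variation(near_ceiling))  # 6.25
```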

Table 4: Coefficient of Variation (CV) for MindScan and MindQ versus MMSE, ADAS-Cog, and FAQ.

Discussion

Our study demonstrates that the computerized MindScan can differentiate normal aging from MCI or mild dementia. Although its AUC was lower than that of the MMSE, the MindScan is self-administered, takes approximately 15 minutes, and can be completed at home. These features make the MindScan promising as an at-home screening tool or as a “preventive” cognitive screen that could be completed quickly before an individual’s annual wellness visit in a general physician’s office.

MindQ was also able to differentiate normal aging from at-risk groups with relatively high accuracy. Its accuracy was comparable to that of the commonly administered FAQ (AUC 0.89 vs 0.91) and its variability was lower (CV 102.2% vs 145.1%), presumably because it has more items that are better tailored to MCI and AD; additionally, the MindQ can be accessed and completed at home on an individual’s personal computer. This process could be incorporated into healthcare checkups and provide IADL information to individuals, families, and physicians.

The MindScan orientation item on the date showed the strongest item-level association with diagnosis, and the MindQ item about remembering what day it is likewise showed the strongest association with diagnosis among MindQ items. Orientation therefore appears to be an easy and reliable indicator when assessing memory in elderly individuals, and focusing on orientation items in clinics and in the design of future tools may be beneficial.

Our study has both strengths and limitations. Its main strength is the relatively high accuracy (AUC > 0.80) and reliability of MindScan and MindQ for detecting cognitive impairment. Further, the majority (83%) of subjects were able to self-administer the MindScan both in the clinic and at home. One weakness is the small sample size and lack of racial and educational diversity in our population, which is not representative of the general population; the associations found should therefore be viewed as preliminary and warrant confirmation in larger studies. Results for MCI subjects had relatively larger standard deviations, consistent with the heterogeneous nature of MCI. Approximately 17% of subjects (3 NC, 3 MCI, 2 AD) had technical difficulties completing their home-based testing, suggesting that individuals without access to a computer or unfamiliar with technology may need assistance to use these tools. As future generations gain more experience with computers, these online tests may become more useful. Our findings should be interpreted with these limitations in mind.

In summary, we report the initial development and validation of two digital screening tests for cognitive and IADL impairment. More definitive clinical trials to support regulatory approval are warranted. Such tests could also be combined with cloud-based, voice-assisted generative AI and advanced automated analytics to provide test instructions, automate scoring, counsel patients, track longitudinal patterns, and generate reports for physicians. Because of their low cost and scalability, such developments hold great promise for the early detection of MCI/AD at home or in primary care settings [12].

Disclosure/Acknowledgments

The study was conducted using internal Duke funding. Holmusk provided technical platform support but had no role in study design or analyses. PMD has received grants, advisory/board fees, and/or stock from several companies for other projects; PMD was an advisor to Holmusk; PMD is a co-inventor on several patents not related to the present study. PK is CEO of Psyber, Inc. and Quilt Technologies, Inc. and co-created MindScan while previously working at Holmusk. KRRK is Professor of Psychiatry at Rush University and Emeritus Professor at Duke University School of Medicine; chairman of Amyriad; a board member/advisor to the Ministry of Health, Ministry of Trade/Industry, and Ministry of Education, Singapore; a board member of Community Health Systems; and an advisor to Sage Therapeutics, Demetrix, Verily, Psyprotix, and Chymia LLC. KRRK has multiple patents in the fields of neuroscience and digital health. KRRK was an intellectual property holder for Mindlinc, which Holmusk acquired from Duke. Other authors report no disclosures.

Conflict of interest

Please see disclosures.

References

- Zygouris S, Tsolaki M (2015) Computerized Cognitive Testing for Older Adults: A Review. American Journal of Alzheimer's Disease and Other Dementias 30(1): 13-28.

- Ozer S, Young J, Champ C, Burke M (2016) A systematic review of the diagnostic test accuracy of brief cognitive tests to detect amnestic mild cognitive impairment. International Journal of Geriatric Psychiatry 31(11): 1139-1150.

- Hancock P, Larner AJ (2011) Test Your Memory test: diagnostic utility in a memory clinic population. International Journal of Geriatric Psychiatry 26(9): 976-980.

- Yan HD, MT Yi Ling, Kelly ETN, Aijing W, EY Shuang Wan, et al. (2019) The Clinical Utility of the TYM and RBANS in a One-Stop Memory Clinic in Singapore: A Pilot Study. Journal of Geriatric Psychiatry and Neurology 32(2): 68-73.

- Trustram Eve C, de Jager CA (2014) Piloting and validation of a novel self-administered online cognitive screening tool in normal older persons: the Cognitive Function Test. International Journal of Geriatric Psychiatry 29(2): 198-206.

- Robbins TW, James M, Owen AM, Sahakian BJ, McInnes L, et al. (1994) Cambridge neuropsychological test automated battery (CANTAB): A factor analytic study of a large sample of normal elderly volunteers. Dementia (Basel, Switzerland) 5(5): 266-281.

- Tornatore JB, Hill E, Laboff JA, McGann ME (2005) Self-Administered Screening for Mild Cognitive Impairment: Initial Validation of a Computerized Test Battery. The Journal of Neuropsychiatry and Clinical Neurosciences 17(1): 98-105.

- Aalbers T, Baars MAE, Olde Rikkert MGM, Kessels RPC (2013) Puzzling with online games (BAM-COG): Reliability, validity, and feasibility of an online self-monitor for cognitive performance in aging adults. Journal of Medical Internet Research 15(12): e270.

- Maruff P, Thomas E, Cysique L, Brew B, Collie A, et al. (2009) Validity of the CogState Brief Battery: Relationship to Standardized Tests and Sensitivity to Cognitive Impairment in Mild Traumatic Brain Injury, Schizophrenia, and AIDS Dementia Complex. Archives of Clinical Neuropsychology 24(2): 165-178.

- Mackinnon A, Mulligan R (1998) Combining Cognitive Testing and Informant Report to Increase Accuracy in Screening for Dementia. The American Journal of Psychiatry 155(11): 1529-1535.

- Krishnan KR, Levy RM, Wagner HR, Gersing K, Doraiswamy PM, et al. (2001) Informant-rated cognitive symptoms in normal aging, mild cognitive impairment, and dementia. Initial development of an informant-rated screen (Brief Cognitive Scale) for mild cognitive impairment and dementia. Psychopharmacology Bulletin 35(3): 79-88.

- Bodner K, Goldberg T, Devanand DP, Doraiswamy PM (2020) Advancing computerized cognitive training for MCI and Alzheimer’s in a pandemic and post-pandemic world. Frontiers in Psychiatry 11.


Citation: Sierra T Pence, Yuhan Liu, Alexandra R Linares, Caroline A Hellegers, Prahlad Krishnan, K Ranga Rama Krishnan and P Murali Doraiswamy. MindScan And MindQ: Digital Self-Screening Tests for Early Alzheimer’s Disease. Glob J Aging Geriatr Res. 2(3): 2023. GJAGR. MS.ID.000536.


Keywords: MindScan and MindQ, Neuropsychological, Blood-based biomarker, Alzheimer’s disease, Preventive


This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.