Mini Review

A Study of Human Computation Applications

Man-Ching Yuen*

Department of Computer Science and Engineering, The Chinese University of Hong Kong, Hong Kong

*Corresponding author: Man-Ching Yuen, Department of Computer Science and Engineering, The Chinese University of Hong Kong, Hong Kong.

Received Date: October 01, 2018; Published Date: November 01, 2018

Abstract

Human computation is a technique that utilizes human abilities to perform computation tasks that are difficult for computers to process. Since the concept of human computation was introduced in 2003, many applications have been developed, and the output data collected by these applications are very useful for many artificial intelligence applications. In this paper, we categorize existing human computation applications and present their major objectives. This paper gives a better understanding of how humans contribute to AI applications.

Keywords: Human computation; survey

Introduction

Human computation comprises computations carried out by humans, where the computation is the mapping process of some input representations to some output representations. As a result of significant advances in computing technology, computers can now solve many problems that previously proved difficult for computer programs. However, many tasks that are trivial for humans continue to challenge even the most sophisticated computer programs. We classify the tasks into three categories:

Annotation

Current computers still have trouble with annotation tasks like reading distorted text or locating a simple object in an image. By contrast, humans can understand and analyze everyday images easily, such as identifying objects in an image and indicating where they are located in the image [1]. Hence, annotation tasks that humans can perform easily are difficult for computer programs.

Possessing knowledge about the real world

Basic facts about the world that the majority of humans accept as truths, such as “water is liquid,” are called commonsense knowledge. It is impossible for computer programs to have such knowledge without manual input of the relevant facts. Besides, humans can describe physical locations in detail by supplying photographic content, descriptive content, “feelings” and emotional words [2], but computer programs are obviously unable to perform such tasks.

Indicating human preferences

Since human preferences are subjective, computer programs cannot predict them accurately. Human preferences have many practical applications. For example, in the case of image searches, knowing which images are more appealing would allow a search engine to display those images first; and in the field of computer vision, such data could be used to train algorithms that assess the quality of images automatically [3].

Artificial intelligence (AI) attracts considerable attention nowadays. Artificial intelligence is the simulation of human intelligence processes by machines, especially computer systems. To acquire human knowledge, computer systems usually use models that “learn” from input data without being explicitly programmed. These input data (i.e., training data) have to be prepared by humans. As a result, human computation applications can produce very useful training datasets for AI applications, such as those that predict human ratings of products.

Overview of Applications

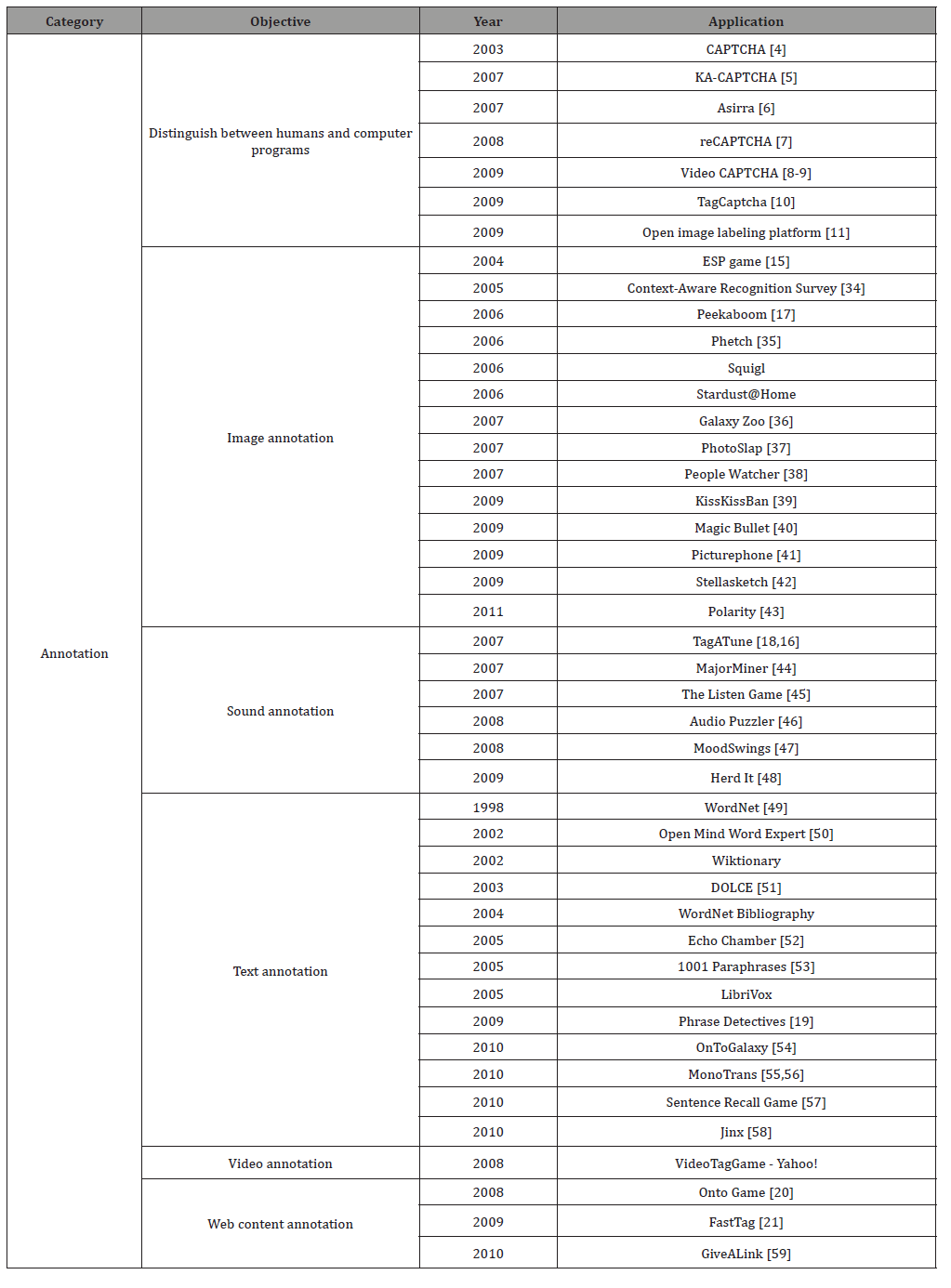

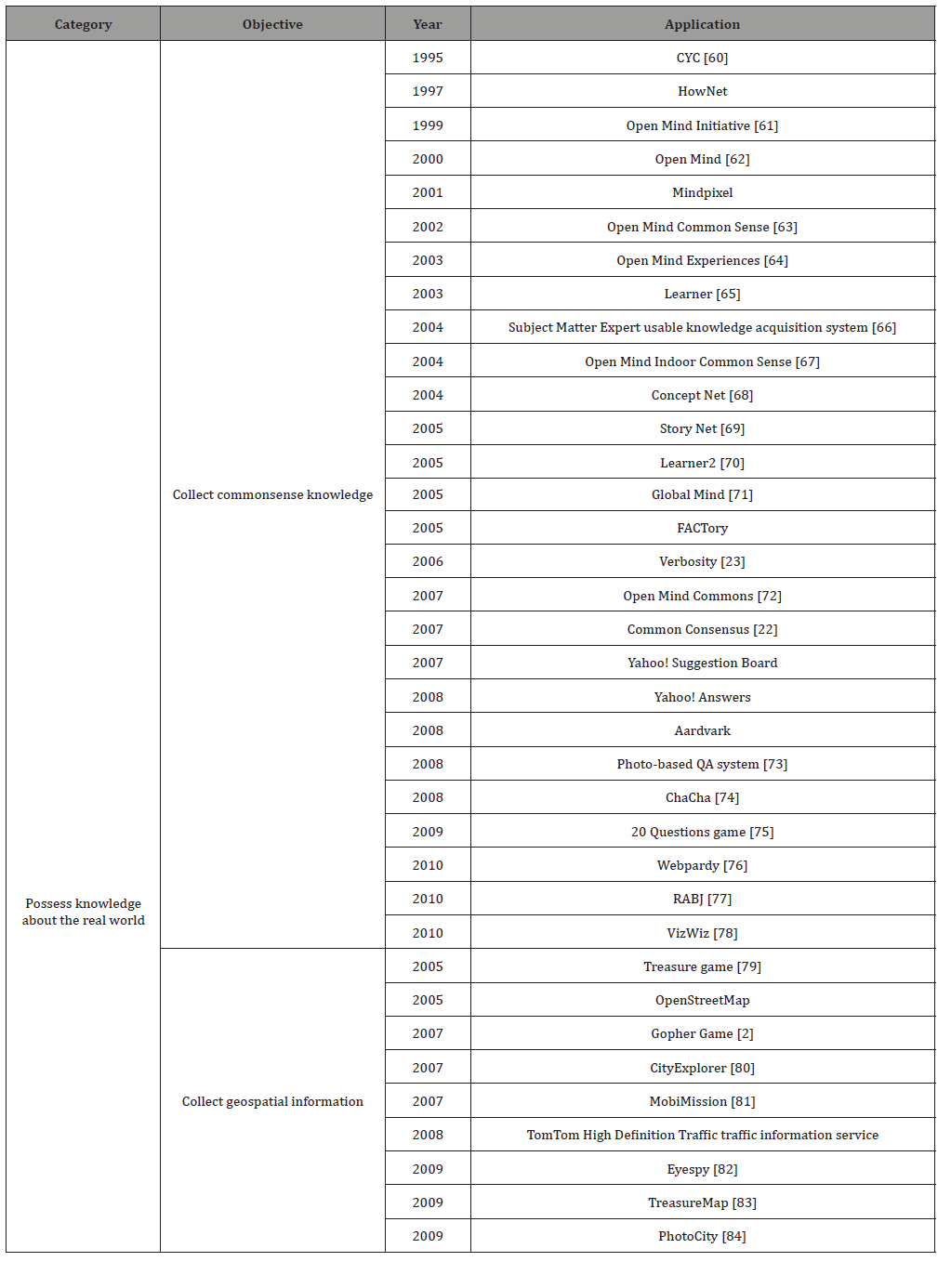

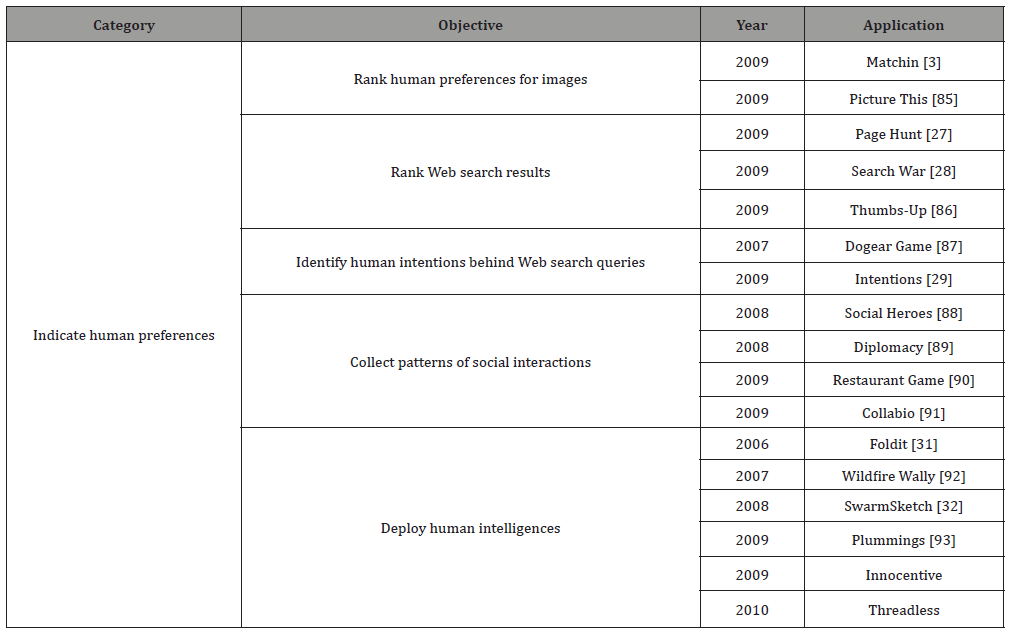

As mentioned before, a large number of human computation applications with different objectives have been proposed in the literature: (1) annotation applications, (2) applications that possess knowledge about the real world, and (3) applications that identify human preferences. In this section, first, we summarize the three types of applications in Table 1, Table 2 and Table 3. Next, we discuss the three types of applications in detail.

Table 1: Development Timeline of Applications for Annotation in Human Computation.

Table 2: Development Timeline of Human Computation Applications that Possess Knowledge about the Real World.

Table 3: Applications that Indicate Human Preferences in Human Computation.

Annotation

Computers can store and process digital files composed of various types of data, e.g., text, images, sound clips, videos and web pages. However, computers cannot understand the information stored in digital files as well as humans can, because computers lack human intelligence and human abilities, such as the basic visual skills needed to read distorted text. Table 1 summarizes the annotation applications proposed in the literature.

Distinguishing between humans and computer programs: Nowadays, there is an increasing need to protect online resources on the World Wide Web by differentiating humans from computers. A program that can achieve such differentiation has many security applications, e.g., preventing dictionary attacks when requesting free email services. Modern cryptography has been successful in protecting online resources on the World Wide Web due to the practice of clearly stating the assumptions under which cryptographic protocols are secure. However, this practice has also allowed the rest of the community to evaluate the assumptions and attempt to violate them. Many AI problems have likewise been stated precisely and publicized, and some have since been solved (chess, for example) [4]. Therefore, cryptography cannot guarantee failsafe security. However, a human computation system can be used as a test that most humans can pass but current computer programs cannot. CAPTCHA [5] is a computer-generated challenge-response test that distinguishes humans from computers by using a commonsense problem. CAPTCHAs are widely used to prevent the abuse of online services, such as creating free web hosting accounts. Moreover, a number of hard AI problems, including speech recognition [6] and image understanding [7-12], have been used as the basis for CAPTCHAs. However, many of these methods were found to offer insufficient security, since they can be broken by bots [13] or by using human computation [14].
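The challenge-response flow behind a CAPTCHA can be sketched as follows. This is a minimal illustration under stated assumptions, not the scheme of [5]: the class name `ChallengeStore` and its methods are hypothetical, and the step that renders the expected answer as distorted text (the part that is hard for programs) is omitted.

```python
import secrets


class ChallengeStore:
    """Hypothetical server-side store for single-use challenges."""

    def __init__(self):
        # Maps a random challenge id to the normalized expected answer.
        self._pending = {}

    def issue(self, expected_answer):
        """Register a challenge and return its opaque id.

        In a real CAPTCHA the server would also render `expected_answer`
        as a distorted image or audio clip; that step is omitted here.
        """
        challenge_id = secrets.token_hex(16)
        self._pending[challenge_id] = expected_answer.strip().lower()
        return challenge_id

    def verify(self, challenge_id, response):
        """Check a response; each challenge can be attempted only once."""
        expected = self._pending.pop(challenge_id, None)
        if expected is None:
            return False
        given = response.strip().lower()
        # Constant-time comparison on bytes avoids leaking timing information.
        return secrets.compare_digest(expected.encode("utf-8"),
                                      given.encode("utf-8"))
```

Removing the challenge on the first attempt is a design choice in this sketch: it prevents a program from guessing repeatedly against the same challenge.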

Image annotation: Accurate descriptions of images are required by several applications, e.g., image search engines and accessibility programs for the visually impaired. Since annotating images manually is tedious and extremely costly [15], current image annotation methods apply computer vision techniques, most of which rely on machine learning. Specifically, an algorithm is trained to perform a visual task by showing it sample images on which the task has already been performed. However, a major problem with this approach is the lack of training data, which must be prepared by hand. At the moment, only a small portion of items on the Web are tagged [16]. It is necessary to find a way to collect huge numbers of images that are fully annotated with information about the objects in each image, such as the location of each object and the extent of the image that needs to be recognized [17]. A human computation system can provide a way to collect such data. The online ESP game [15] was the first human computation system. Google now uses it as the Google Image Labeler, which collects labels for images on the Web. Some websites also support image annotation, e.g., Yahoo’s Flickr and the Distributed Proofreaders project.

Sound annotation: With the proliferation of multimedia objects on the Internet, collaborative tagging has emerged as a major strategy for organizing Web content. However, for dynamic multimedia objects, such as sound, music, and video clips, the social tags found online often describe an object as a whole, making it difficult to link tags to specific web content elements. As a result, social tags are unsuitable as data for training algorithms for music and video tagging, which rely on the specific web content elements being tagged (as opposed to the overall content) [16]. A human computation system can collect specific web content elements, such as feelings and preferences, for sound clips from humans. The TagATune system [16,18] was the first game to provide annotation for sounds and music to improve audio searches.

Text annotation: Learning a new language is not always easy. In the past, the only way to learn a language was to attend classes, read books and listen to tapes. Nowadays, people can use the Internet to participate in distance learning and read online materials. There is a demand for computer systems that can help people learn different languages in an effective way. Building a language learning system requires a large amount of data that can only be obtained from humans, e.g., identifying the relationships between words and phrases in a short text and annotations of the text. A human computation system can help collect text annotations and the anaphoric relations in a language from humans [19]. Such data can also be used to train anaphora resolution systems, which can be implemented to improve text summarization and search engine indexing. Ultimately, this technology will lead to better Web experiences for Internet users.

Video annotation: People can now record videos easily by using their cell phones or mobile devices. Users of popular video sharing web sites, such as YouTube, contribute millions of tags each year. However, as mentioned earlier, video clip tags often describe the video as a whole, so they cannot be linked to specific content elements. As a result, they are unsuitable as data for training algorithms for video tagging. Yahoo! developed a human computation game called Video-Tag Game to collect specific content elements, such as feelings and preferences for video clips, from humans.

Web content annotation: Making the Semantic Web a reality involves many tasks that even leading-edge computer technologies are unable to perform fully automatically; however, with moderate training, humans can master such tasks. The Semantic Web’s life cycle includes the construction of ontologies, the semantic annotation of data and the alignment of ontologies. Therefore, a large number of people are needed to contribute a substantial amount of effort to generate training sets for semi-automatic approaches. Even at the current slow rate of progress, the job would be completed eventually if the ontologies we need and the data to be annotated were static. However, building the Semantic Web is an ongoing challenge because domains and their respective representations change, and therefore require constant maintenance [20]. A human computation system provides a platform for humans to contribute to the Semantic Web’s life cycle. Siorpaes & Hepp [20] applied human computation to ontology alignment and web content annotation for the Semantic Web by using various games, such as OntoPronto, SpotTheLink, and OntoTube. Krause et al. proposed a binary verification game called Fast Tag [21], which embeds collaborative tagging into online games to produce domain-specific folksonomies that can be used to filter user preferences or enhance web searches.

Applications that possess knowledge about the real world

Computer programs do not possess commonsense knowledge about the real world. Such knowledge consists of basic facts that the majority of humans accept as truths about the world, e.g., “milk is white”. Table 2 summarizes the applications that possess knowledge about the real world through human computation.

Collecting commonsense knowledge: A major difference between computers and humans is that computers do not have the vast resources of everyday commonsense knowledge that we humans rely on to solve problems and communicate. Commonsense knowledge, such as “people sleep at night” and “doors can be opened”, seems trivial and implied, but standard computer software lacks knowledge about such fundamental facts. In the AI community, the problem of acquiring this enormous body of knowledge is known as the knowledge acquisition bottleneck [22]. To create truly intelligent systems, the data must be collected from humans [23]. Lieberman et al. developed a number of interactive applications that apply commonsense knowledge [24], such as agents for digital photography [25].

Collecting geospatial information: The rapid increase in mobile phone usage means that mobile computing technologies are now available to an enormous number of people. The Global Positioning System (GPS) enables users to pinpoint their physical locations and determine the best route to a destination. However, the descriptive content relevant to those locations can only be collected by humans, and this information is very useful to humans, for example, when finding a good café near a building. OpenStreetMap is a free editable world map compiled by Internet users. It allows users to view, edit and use geographical data in a collaborative way from anywhere on Earth. In addition, the TomTom High Definition Traffic information service enables users to receive the most up-to-date traffic information in the United Kingdom. Commercial systems like HD Traffic use coarse location data from cell phones to provide high-quality traffic information.

Indicating human preferences

Because human preferences are subjective, they cannot be predicted accurately by computer programs. When choosing between two images, different users might have different preferences. The task cannot be performed by computer programs without inputting data manually to train the computer programs. In this sub-section, we discuss applications that indicate human preferences. Table 3 summarizes the existing applications that indicate human preferences in human computation.

Ranking human preferences for images: Human computation applications are used to collect human preferences from manual input. Eliciting user preferences for large datasets and creating rankings based on those preferences has many practical applications in community-based sites. For example, in the case of image searches, knowing which images are more appealing would allow a search engine to display the more appealing images first. In addition, user preferences provide a measure of user behavior patterns [3]. The first application developed to collect human preferences was the Matchin system [3], which collects human preferences for two images, and then helps the image search engine to rank images based on which ones are the most appealing.
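One simple way to turn pairwise choices into a ranking is sketched below. It assumes each judgment is recorded as a (preferred, other) pair and orders images by the fraction of comparisons they won. The function name `rank_by_win_rate` and the sample data are hypothetical, and this is not presented as the ranking method used by Matchin [3].

```python
from collections import defaultdict


def rank_by_win_rate(comparisons):
    """Rank items from pairwise preferences.

    `comparisons` is an iterable of (preferred, other) pairs.
    Items are ordered by the fraction of comparisons they won,
    with ties broken alphabetically so the output is deterministic.
    """
    wins = defaultdict(int)
    games = defaultdict(int)
    for preferred, other in comparisons:
        wins[preferred] += 1
        games[preferred] += 1
        games[other] += 1
    return sorted(games, key=lambda item: (-wins[item] / games[item], item))


# Hypothetical judgments: "a" was preferred over "b" and "c", "b" over "c".
judgments = [("a", "b"), ("a", "c"), ("b", "c")]
print(rank_by_win_rate(judgments))  # ['a', 'b', 'c']
```

A win fraction is easy to compute but ignores the strength of the opponents; rating systems that account for this are a natural alternative when the comparisons are unevenly distributed.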

Ranking Web search results: The main function of the World Wide Web is to share information among Internet users, while web search engines help users find required information. Although many works on search engines and optimal rank aggregation are presented in the literature, humans are still needed to produce more relevant rankings. Given two documents, humans are better at ranking their (perceived) relevance than a computer. This is especially true if the ranking involves personal preferences. Moreover, although computers (and search engines) employ sophisticated machine learning methods to rank search results, humans can be asked to provide absolute or preference judgments directly, or to provide such judgments indirectly by clicking on the preferred links in the output of search engines. For document relevance evaluation, it is easier for humans to make preference judgments on a pair of documents than to make absolute judgments on a single document [26]. As a result, a number of social games elicit data about web pages from players to improve web searching. Games like Page Hunt [27] (a single-player game) and Search War [28] (a two-player competitive online game) seek to learn a mapping from web pages to queries.

Identifying human intentions behind Web search queries: To save time spent on Web searching, there are many social bookmarking sites on the Internet, e.g., del.icio.us. Social tags used in the context of a social bookmarking service provide an effective way to improve social navigation. The Dogear Game [76] is an enterprise social bookmarking system that collects the bookmarks of colleagues in order to accomplish organizational goals. Each player is entertained and learns about his/her colleagues’ bookmarks at the same time. Knowing the intention of a web search query allows researchers to develop more intelligent ways to retrieve relevant search results. Query logs are rich sources of information for analyzing the intent of the most common search queries. Despite the availability of these query logs, researchers still have to label a huge number of search queries manually in order to compile the training data needed to create algorithms for predicting query intent. However, collecting data manually is extremely time-consuming and costly. Law et al. proposed a human computation game called Intentions [29], which collects data about the intentions behind search queries.
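Labels collected from several players typically need to be combined before they can serve as training data. The sketch below assumes a plain majority vote with an agreement threshold. The function name `aggregate_labels`, the threshold, and the intent labels are illustrative assumptions rather than details of Intentions [29].

```python
from collections import Counter


def aggregate_labels(votes, min_agreement=0.5):
    """Combine per-item labels from several people by majority vote.

    `votes` maps each item (e.g., a search query) to a list of labels.
    An item is kept only if its most common label accounts for strictly
    more than `min_agreement` of the votes; other items are left out so
    that ambiguous cases do not enter the training data.
    """
    accepted = {}
    for item, labels in votes.items():
        if not labels:
            continue
        label, count = Counter(labels).most_common(1)[0]
        if count / len(labels) > min_agreement:
            accepted[item] = label
    return accepted


# Hypothetical votes on the intent behind two queries.
votes = {
    "cuhk library hours": ["informational", "informational", "navigational"],
    "python": ["informational", "navigational"],
}
print(aggregate_labels(votes))  # {'cuhk library hours': 'informational'}
```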

Collecting patterns of social interactions: Many new applications, such as medical and gaming applications, rely heavily on the creation of a virtual environment that mimics real-world interactions. Commonsense is an important aspect of virtual reality. Significant resources are required to create realistic scenarios so that commonsense knowledge can be gleaned from the real world. Social data are used to learn behavior models for simulation systems, and the social data must be collected from humans [30]. A number of social games are designed to collect data on the social interactions among humans. The Restaurant Game [8] [9] presents a method of learning human behavior patterns through online gaming. In the game, players collaborate to create a virtual salad by selecting and discussing the available ingredients. The collected data are used to learn behavior models for autonomous social robots.

Deploying human intelligence: Some games enable players to contribute to the results of important scientific research. Scientists are being confronted by increasingly complex problems, but current technology is unable to provide solutions. As a result, a research team may not obtain tangible results for weeks, months, or even years. Foldit [31] is a computer game that allows players to assist in predicting protein structures, an important area of biochemistry that seeks to find cures for diseases, by taking advantage of humans’ intuitive puzzle-solving abilities. Some crowdsourcing platforms, such as Threadless, collect graphic T-shirt designs created by community members. Although technology has made rapid advances, computers cannot provide innovative or creative ideas in a product design process the way humans can. Different individuals may come up with different ideas, for example when designing a T-shirt [32].

Human Computation for Artificial Intelligence

There are many examples of AI applications that use human input data. In [33], users are presented with annotated examples and asked to use them to predict the ratings that target users would give to other items. In natural language processing (NLP), a computer system needs to understand and process human language. Most current methods used in NLP are based on machine learning. Examples of NLP tasks include machine translation, sentiment analysis, and speech recognition (speech-to-text conversion). The more human input data NLP systems have, the more accurate they tend to be. As more AI systems are developed in the future, the need for human computation applications that collect human data for training AI systems will increase too.

Conclusion

This paper gives an overview of current human computation applications, including their objectives and how they work. Human computation tasks are trivial for humans but still difficult for even sophisticated computers. Because the outputs of human computation tasks are useful for the development of AI systems, we believe that human computation will become increasingly important alongside artificial intelligence in the future.

Acknowledgement

None.

Conflict of Interest

No Conflict of Interest.

References

- von Ahn L (2006) Games with a purpose. Computer pp. 92-94.

- Casey S, Kirman B, Rowland D (2007) The gopher game: a social, mobile, locative game with user generated content and peer review. In ACE ’07: Proceedings of the international conference on Advances in computer entertainment technology. ACM pp. 9-16.

- Hacker S, Von Ahn L (2009) Matchin: eliciting user preferences with an online game. In CHI ’09: Proceedings of the 27th international conference on Human factors in computing systems. ACM pp. 1207-1216.

- von Ahn L, Blum M, Hopper NJ, Langford J (2003) CAPTCHA: Using Hard AI Problems for Security. In EUROCRYPT ’03: Proceedings of the 22nd international conference on Theory and applications of cryptographic techniques. Springer pp. 294-311.

- Da Silva BN, Garcia ACB (2007) Ka-captcha: an opportunity for knowledge acquisition on the web. In AAAI’07: Proceedings of the 22nd national conference on Artificial intelligence. AAAI Press pp. 1322-1327.

- von Ahn L, Maurer B, Mcmillen C, Abraham D, Blum M (2008) reCAPTCHA: Human-Based Character Recognition via Web Security Measures. Science 321(5895): 1465-1468.

- Kluever KA, Zanibbi R (2009) Balancing usability and security in a video captcha. In SOUPS ’09: Proceedings of the 5th Symposium on Usable Privacy and Security. pp. 1-11.

- Yan J, El Ahmad AS (2008b) Usability of captchas or usability issues in captcha design. In SOUPS ’08: Proceedings of the 4th symposium on Usable privacy and security. ACM pp. 44-52.

- Morrison D, Marchand-Maillet S, Bruno E (2009) TagCaptcha: annotating images with CAPTCHAs. In HCOMP ’09: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 44-45.

- Faymonville P, Wang K, Miller J, Belongie S (2009) Captcha-based image labeling on the soylent grid. In HCOMP ’09: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 46-49.

- von Ahn L, Dabbish L (2004) Labeling images with a computer game. In CHI ’04: Proceedings of the SIGCHI conference on Human factors in computing systems. ACM pp. 319-326.

- von Ahn L, Ginosar S, Kedia M, Blum M (2007) Improving Image Search with PHETCH. In ICASSP ’07: Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing. pp. 1209-1212.

- Brockett C, Dolan WB (2005) Echo chamber: A game for eliciting a colloquial paraphrase corpus. In KCVC’05: Proceedings of AAAI 2005 Spring Symposium, Knowledge Collection from Volunteer Contributors. AAAI Press, pp. 21-23.

- Ho CJ, Chang TH, Hsu JYJ (2007) PhotoSlap: a multi-player online game for semantic annotation. In AAAI’07: Proceedings of the 22nd national conference on Artificial intelligence. AAAI Press.

- Chklovski T (2005) 1001 paraphrases: Incenting responsible contributions in collecting paraphrases from volunteers. In KCVC ’05: Proceedings of AAAI 2005 Spring Symposium, Knowledge Collection from Volunteer Contributors. AAAI Press pp. 16-20.

- Chamberlain J, Poesio M, Kruschwitz U (2009) A demonstration of human computation using the phrase detective’s annotation game. In HCOMP ’09: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 23-24.

- Krause M, Takhtamysheva A, Wittstock M, Malaka R (2010) Frontiers of a paradigm: exploring human computation with digital games. In HCOMP ’10: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM, pp. 22-25.

- Hu C, Bederson BB, Resnik P (2010) Translation by iterative collaboration between monolingual users. In HCOMP ’10: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 39-46.

- Hu C, Bederson BB, Resnik P, Kronrod Y (2011) MonoTrans2: a new human computation system to support monolingual translation. In CHI ’11: Proceedings of the 2011 annual conference on Human factors in computing systems. ACM, pp. 1133-1136.

- Wang J, Yu B (2010) Sentence recall game: a novel tool for collecting data to discover language usage patterns. In HCOMP ’10: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 56-59.

- Seemakurty N, Chu J, Von Ahn L, Tomasic A (2010) Word sense disambiguation via human computation. In HCOMP ’10: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 60-63.

- Siorpaes K, Hepp M (2008) Games with a purpose for the semantic web. IEEE Intelligent Systems 23(3): 50-60.

- Krause M, Aras H (2009) Playful tagging: folksonomy generation using online games. In WWW ’09: Proceedings of the 18th international conference on World Wide Web. ACM pp. 1207-1208.

- Paek T, Ju Y, Meek C (2007) People Watcher: A Game for Eliciting Human-Transcribed Data for Automated Directory Assistance. In INTERSPEECH ’07: Proceedings of the 8th Annual Conference of the International Speech Communication Association. ISCA pp. 1322-1325.

- Land K, Slosar A, Lintott C, Andreescu D, Bamford S, et al. (2008) Galaxy zoo: The large-scale spin statistics of spiral galaxies in the sloan digital sky survey. Monthly Notices of the Royal Astronomical Society (MNRAS) 388(4): 1686-1692.

- Weng L, Menczer F (2010) Givealink tagging game: an incentive for social annotation. In HCOMP ’10: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 26-29.

- Lenat DB (1995) CYC: a large-scale investment in knowledge infrastructure. Communications of the ACM 38(11): 33-38.

- Stork DG (1999) The open mind initiative. IEEE Expert Systems and Their Applications 14(3): 19-20.

- Stork DG (2000) Open data collection for training intelligent software in the open mind initiative. In EIS ’00: Proceedings of the Engineering Intelligent Systems Symposium. pp. 1-7.

- Singh P (2002) The public acquisition of commonsense knowledge. In Proceedings of AAAI Spring Symposium: Acquiring (and Using) Linguistic (and World) Knowledge for Information Access. AAAI Press.

- Singh P, Barry B (2003) Collecting commonsense experiences. In K-CAP ’03: Proceedings of the 2nd international conference on Knowledge capture. ACM pp. 154-161.

- Chklovski T (2003a) Learner: a system for acquiring commonsense knowledge by analogy. In K-CAP ’03: Proceedings of the 2nd international conference on Knowledge capture. ACM pp. 4-12.

- Belasco A, Curtis J, Kahlert RC, Klein C, Mayans C, et al. (2004) Representing knowledge gaps effectively. In PAKM ’04: Proceedings of the 5th international conference on Practical Aspects of Knowledge Management. Springer pp. 2-3.

- Gupta R, Kochenderfer MJ (2004) Common sense data acquisition for indoor mobile robots. In AAAI’04: Proceedings of the 19th national conference on Artificial intelligence. AAAI Press pp. 605-610.

- Liu H, Singh P (2004) Commonsense reasoning in and over natural language. In KES ’04: Proceedings of the 8th International Conference on Knowledge-Based Intelligent Information and Engineering Systems. Springer.

- Ho CJ, Chen KT (2009) On formal models for social verification. In HCOMP ’09: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 62-69.

- Williams R, Barry B, Singh P (2005) Comickit: acquiring story scripts using common sense feedback. In IUI’05: Proceedings of the 10th international conference on Intelligent user interfaces. ACM pp. 302-304.

- Chklovski T (2005) Designing interfaces for guided collection of knowledge about everyday objects from volunteers. In IUI ’05: Proceedings of the 10th international conference on Intelligent user interfaces. ACM pp. 311-313.

- Chung H (2006) Globalmind - bridging the gap between different cultures and languages with common-sense computing. M.S. thesis, Massachusetts Institute of Technology.

- von Ahn L, Kedia M, Blum M (2006) Verbosity: a game for collecting common-sense facts. In CHI ’06: Proceedings of the SIGCHI conference on Human Factors in computing systems. ACM pp. 75-78.

- Speer R (2007) Open Mind Commons: An Inquisitive Approach to Learning Common Sense. In Proceedings of Workshop on Common Sense and Intelligent User Interfaces.

- Yan J, Yu SY (2009) Magic bullet: a dual-purpose computer game. In HCOMP ’09: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 32-33.

- Johnson G (2009) Picturephone: A game for sketch data capture. In IUI ’09: Proceedings of Workshop on Sketch Recognition.

- Lieberman H, Smith D, Teeters A (2007) Common Consensus: a web-based game for collecting commonsense goals. In IUI ’07: Proceedings of Workshop on Common Sense for Intelligent Interfaces, ACM Conference Intelligent User Interfaces. ACM.

- Yeh T, Lee JJ, Darrell T (2008) Photo-based question answering. In MM ’08: Proceeding of the 16th ACM international conference on Multimedia. ACM pp. 389-398.

- Chen M, Hearst M, Hong J, Lin J (1999) Cha-cha: a system for organizing intranet search results. In USITS’99: Proceedings of the 2nd conference on USENIX Symposium on Internet Technologies and Systems. USENIX Association pp. 5-5.

- Speer R, Krishnamurthy J, Havasi C, Smith D, Lieberman H, et al. (2009) An interface for targeted collection of common-sense knowledge using a mixture model. In IUI ’09: Proceedings of the 13th international conference on Intelligent user interfaces. ACM pp. 137-146.

- Aras H, Krause M, Haller A, Malaka R (2010) Webpardy: harvesting QA by HC. In HCOMP ’10: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 49-52.

- Kochhar S, Mazzocchi S, Paritosh P (2010) The anatomy of a large-scale human computation engine. In HCOMP ’10: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 10-17.

- Bigham JP, Jayant C, Ji H, Little G, Miller A, et al. (2010) VizWiz: nearly real-time answers to visual questions. In W4A ’10: Proceedings of the 2010 International Cross Disciplinary Conference on Web Accessibility. ACM pp. 333-342.

- Barkhuus L, Chalmers M, Tennent P, Hall M, Bell M, et al. (2005) Picking pockets on the lawn: The development of tactics and strategies in a mobile game. In UbiComp ’05: Proceedings of the 7th International Conference on Ubiquitous Computing. Springer, pp. 358-374.

- Matyas S (2007) Playful geospatial data acquisition by location-based gaming communities. The International Journal of Virtual Reality 6(3): 1-10.

- Grant L, Daanen H, Benford S, Hampshire A, Drozd A, et al. (2007) Mobimissions: the game of missions for mobile phones. In SIGGRAPH ’07: ACM SIGGRAPH 2007 educators’ program. ACM 12.

- Bell M, Reeves S, Brown B, Sherwood S, Macmillan D, et al. (2009) Eyespy: supporting navigation through play. In CHI ’09: Proceedings of the 27th international conference on Human factors in computing systems. ACM pp. 123-132.

- Arase Y, Xie X, Duan M, Hara T, Nishio S (2009) A game-based approach to assign geographical relevance to web images. In WWW ’09: Proceedings of the 18th international conference on World Wide Web. ACM pp. 811-820.

- Tuite K, Snavely N, Hsiao DY, Smith AM, Popović Z (2010) Reconstructing the world in 3D: bringing games with a purpose outdoors. In FDG ’10: Proceedings of the 5th International Conference on Foundations of Digital Games. ACM pp. 232-239.

- Law E, Settles B, Snook A, Surana H, Von Ahn L, et al. (2011) Human computation for attribute and attribute value acquisition. In CVPR ’11: Proceedings of IEEE Computer Vision and Pattern Recognition 2011, Workshop on Fine-Grained Visual Categorization. IEEE Computer Society.

- Bennett PN, Chickering DM, Mityagin A (2009) Picture this: preferences for image search. In HCOMP ’09: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 25-26.

- Johnson G, Do EYL (2009) Games for sketch data collection. In SBIM ’09: Proceedings of the 6th Eurographics Symposium on Sketch-Based Interfaces and Modeling. ACM pp. 117-123.

- Ma H, Chandrasekar R, Quirk C, Gupta A (2009) Page hunt: using human computation games to improve web search. In HCOMP ’09: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 275-284.

- Law E, Von Ahn L, Mitchell T (2009) Search war: a game for improving web search. In HCOMP ’09: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 31-31.

- Dasdan A, Drome C, Kolay S, Alpern M, Han A, et al. (2009) Thumbs-up: a game for playing to rank search results. In HCOMP ’09: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 36-37.

- Dugan C, Muller M, Millen DR, Geyer W, Brownholtz B (2007) The dogear game: a social bookmark recommender system. In GROUP ’07: Proceedings of the 2007 international ACM conference on Supporting group work. ACM pp. 387-390.

- Law E, Mityagin A, Chickering M (2009) Intentions: a game for classifying search query intent. In CHI ’09: Proceedings of the 27th international conference extended abstracts on Human factors in computing systems. ACM pp. 3805-3810.

- Simon A (2008) Social heroes: games as APIs for social interaction. In DIMEA ’08: Proceedings of the 3rd international conference on Digital Interactive Media in Entertainment and Arts. ACM pp. 40-45.

- Krzywinski A, Chen W, Helgesen A (2008) Agent architecture in social games - the implementation of subsumption architecture in diplomacy. In AIIDE ’08: Proceedings of the 4th Artificial Intelligence and Interactive Digital Entertainment Conference. AAAI Press pp. 191-196.

- Orkin J and Roy D (2007) The restaurant game: Learning social behavior and language from thousands of players online. Journal of Game Development 3(1): 39-60.

- Bernstein M, Tan D, Smith G, Czerwinski M, Horvitz E (2009) Collabio: a game for annotating people within social networks. In UIST ’09: Proceedings of the 22nd annual ACM symposium on User interface software and technology. ACM pp. 97-100.

- Cooper S, Khatib F, Makedon I, Lu H, Barbero J, et al. (2011) Analysis of social gameplay macros in the Foldit cookbook. In FDG ’11: Proceedings of the Sixth International Conference on the Foundations of Digital Games. ACM pp. 9-14.

- Peck E, Riolo M and Cusack C (2007) Wildfire wally: a volunteer computing game. In Future Play ’07: Proceedings of the 2007 conference on Future Play. ACM pp. 241-242.

- Brabham DC (2008) Crowdsourcing as a model for problem solving: An introduction and cases. Convergence: The International Journal of Research into New Media Technologies 14(1): 75-90.

- Terry L, Roitch V, Tufail S, Singh K, Taraq O, et al. (2009) Harnessing Human Computation Cycles for the FPGA Placement Problem. In ERSA. CSREA Press pp. 188-194.

- Turnbull D, Liu R, Barrington L, Lanckriet G (2007) A game-based approach for collecting semantic annotations of music. In ISMIR ’07: Proceedings of the 8th International Conference on Music Information Retrieval.

- Diakopoulos N, Luther K and Essa I (2008) Audio puzzler: piecing together time-stamped speech transcripts with a puzzle game. In MM ’08: Proceeding of the 16th ACM international conference on Multimedia. ACM pp. 865-868.

- Chan TY (2003) Using a text-to-speech synthesizer to generate a reverse Turing test. In ICTAI ’03: Proceedings of the 15th IEEE International Conference on Tools with Artificial Intelligence. IEEE Computer Society p. 226.

- Zhang J and Wang X (2010) Breaking Internet Banking CAPTCHA Based on Instance Learning. In ISCID ’10: Proceedings of the 2010 International Symposium on Computational Intelligence and Design - Volume 01. IEEE Computer Society pp. 39-43.

- Kim YE, Schmidt E, Emelle L (2008) Moodswings: A collaborative game for music mood label collection. In ISMIR ’08: Proceedings of the 2008 International Conference on Music Information Retrieval. pp. 231-236.

- Egele M, Bilge L, Kirda E, Kruegel C (2010) CAPTCHA smuggling: hijacking web browsing sessions to create CAPTCHA farms. In SAC ’10: Proceedings of the 2010 ACM Symposium on Applied Computing. ACM pp. 1865-1870.

- Lieberman H, Liu H, Singh P, Barry B (2004) Beating common sense into interactive applications. AI Magazine 25: 63-76.

- Liu H, Lieberman H (2002) Robust photo retrieval using world semantics. In LREC ’02: Proceedings of Workshop on Creating and Using Semantics for Information Retrieval and Filtering: State-of-the-art and Future Research. LREC Press pp. 15-20.

- Carterette B, Bennett PN, Chickering DM, Dumais ST (2008) Here or there: preference judgments for relevance. In ECIR ’08: Proceedings of the IR research, 30th European conference on Advances in information retrieval. Springer-Verlag pp. 16-27.

- Fellbaum C (1998) WordNet: An Electronic Lexical Database. The MIT Press, Cambridge, MA.

- Alonso JB, Chang A, Orkin J, Breazeal C (2009) Eliciting collaborative social interactions via online games. In IUI ’09: Proceedings of Intelligent User Interfaces Workshop. ACM pp. 1-5.

- Chklovski T, Mihalcea R (2002) Building a sense tagged corpus with open mind word expert. In Proceedings of the ACL-02 workshop on Word sense disambiguation. Association for Computational Linguistics, pp. 116-122.

- Organisciak P, Teevan J, Dumais S, Miller RC, Kalai AT (2013) Personalized Human Computation. In First AAAI Conference on Human Computation and Crowdsourcing.

- Elson J, Douceur JR, Howell J, Saul J (2007) Asirra: a CAPTCHA that exploits interest-aligned manual image categorization. In CCS ’07: Proceedings of the 14th ACM conference on Computer and communications security. ACM pp. 366-374.

- Wilson DH, Long AC, Atkeson C (2005) A context-aware recognition survey for data collection using ubiquitous sensors in the home. In CHI EA ’05: Proceedings of the 23rd international conference extended abstracts on Human factors in computing systems. ACM.

- von Ahn L, Liu R, Blum M (2006) Peekaboom: a game for locating objects in images. In CHI ’06: Proceedings of the SIGCHI conference on Human Factors in computing systems. ACM pp. 55-64.

- Law ELM, von Ahn L, Dannenberg RB, Crawford M (2007) TagATune: A Game for Music and Sound Annotation. In ISMIR ’07: Proceedings of the 8th International Conference on Music Information Retrieval. pp. 361-364.

- Law E, von Ahn L (2009) Input-agreement: a new mechanism for collecting data using human computation games. In CHI ’09: Proceedings of the 27th international conference on Human factors in computing systems. ACM pp. 1197-1206.

- Mandel M, Ellis D (2007) A web-based game for collecting music metadata. In ISMIR ’07: Proceedings of the 8th International Conference on Music Information Retrieval.

- Barrington L, O’Malley D, Turnbull D, Lanckriet G (2009) User-centered design of a social game to tag music. In HCOMP ’09: Proceedings of the ACM SIGKDD Workshop on Human Computation. ACM pp. 7-10.

- Gangemi A, Guarino N, Masolo C, Oltramari A (2003) Sweetening WordNet with DOLCE. AI Magazine 24(3): 13-24.

Man-Ching Yuen. A Study of Human Computation Applications. Annal Biostat & Biomed Appli. 1(1): 2018. ABBA.MS.ID.000504.


This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.